최근 컴퓨터의 발전이 한계에 도달했다. 인공지능, 가상현실 등의 발달로 처리할 데이터양은 날로 느는 반면, 더는 소자를 작게 하는 것이 어려워졌기 때문이다. 소자를 원자 하나 이하로 구현하기는 불가능하다. 또한, 원자 단위의 미시세계에서는 예기치 못했던 문제들이 발생하기 때문에 이제 기존 방식으로는 성능을 높일 수 없다. 이에 양자역학의 원리에 따라 작동되는 양자 컴퓨터가 대안으로 떠오르고 있다.

인공지능, 가상현실 등의 발달로 처리할 데이터양은 늘어가는데 집적회로의 한계는 가까워지고 있다. 그래서 트랜지스터로 만들어진 게이트 대신 양자역학의 원리를 연산법칙으로 사용하는 양자 컴퓨터가 대안으로 떠오르고 있다. 도대체 양자가 뭔지, 또 그걸로 어떻게 하여 대안이라는 걸까? 과학과 인연이 없던 기자가 양자부터 최근 화제가 되는 양자 컴퓨터까지, 배우는 마음으로 차근차근 들여다본다.

기존 컴퓨터의 작동 원리

컴퓨터(computer)는 말 그대로 계산(compute)하는 기계다. 컴퓨터 계산기로서, 우리가 흔히 쓰는 계산기와 다른 점은 더욱 크고 다양한 계산이 가능하다는 것이다.

컴퓨터의 언어는 0과 1 뿐이다

컴퓨터는 컴퓨터 안 소자의 상태를 달리하여 숫자를 표현한다. 이를 쉽게 하도록 컴퓨터는 2진법을 사용한다. 흔히 쓰는 10진법을 컴퓨터에 쓰지 않는 이유는 간단하다. 소자의 상태를 10가지나 필요하기 때문이다. 2진법을 쓰면 소자의 상태는 2가지면 충분하다. 반도체 다이오드가 닫혀있으면 0, 열려있으면 1이다. 하나의 다이오드는 0 아니면 1의 값만을 가진다. 이 기본 단위가 비트다.

2진법으로도 모든 정수를 표현할 수 있다. 길게 늘어선 다이오드가 각각 0과 1을 표시하면 큰 수를 표현할 수 있으며, 여러 줄로 늘어선 다이오드는 많은 수를 표현할 수 있다. 이렇듯 많은 다이오드를 각각 0과 1을 표시한 상태로 놔두면 크고 많은 수를 기억할 수 있다. 이것이 바로 HDD와 SSD 같은 기억 장치다.

다이오드의 열림과 닫힘, 즉 스위칭(switching)은 또한 연산을 가능케 한다. 컴퓨터의 가장 기본적인 명령은 덧셈이다. 곱셉은 덧셈의 누적이며 뺄셈과 나눗셈은 덧셈과 곱셉을 거꾸로 한 것이다. 기억 장치가 기억한 수를 불러와 다이오드의 스위칭 상태를 어떠어떠하게 바꾸라는 어떤 명령을 처리 장치에 내리는 것으로 연산이 이뤄진다. 바로 CPU에서 일어나는 일들이다.

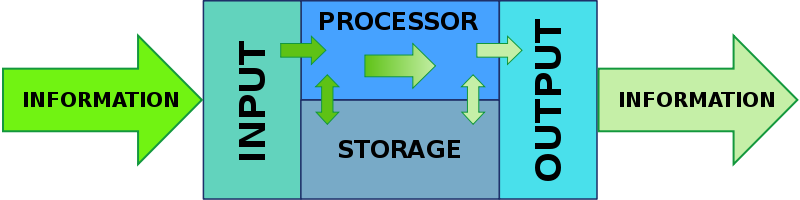

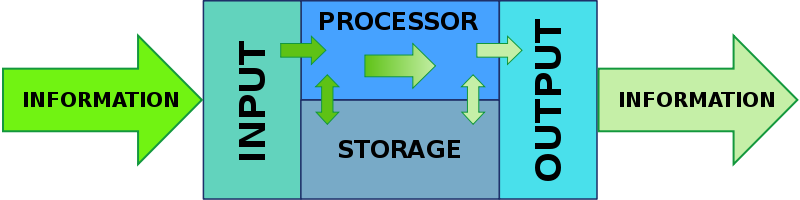

컴퓨터의 정보 흐름

컴퓨터는 숫자들을 다른 숫자로 바꾸는 일을 할 뿐이다. 컴퓨터는 입력 비트들을 출력 비트들로 바꾸는 계산기라 할 수 있다. 컴퓨터는 반도체의 집적도가 2년마다 2배로 늘어난다는 무어의 법칙에 따라 눈부신 발전을 거듭했다.

그러나 최근 컴퓨터의 발전이 한계에 도달했다. 인공지능, 가상현실 등의 발달로 처리할 데이터양은 날로 느는 반면, 더는 소자를 작게 하는 것이 어려워졌기 때문이다. 소자를 원자 하나 이하로 구현하기는 불가능하다. 또한, 원자 단위의 미시세계에서는 예기치 못했던 문제들이 발생하기 때문에 이제 기존 방식으로는 성능을 높일 수 없다.

이에 양자역학의 원리에 따라 작동되는 양자 컴퓨터가 대안으로 떠오르고 있다.

양자 컴퓨터의 고안

1959년 12월, 물리학자 리처드 파인만은 미국 물리학회에서 ‘바닥에는 여지가 많다(There’s Plenty of Room at the Bottom)’라는 제목의 강연을 했다. 그 자리에서 파인만은 일련의 발언들을 통해 양자역학의 원리를 이용한 연산에 대한 가능성을 엿봤다.

시간이 흘러 1981년, 파인만은 MIT와 IBM이 공동 주최한 컨퍼런스에서 "자연은 고전적이지 않다. 그러니 자연을 시뮬레이션하려면 양자역학적으로 해야 할 것이다"라며 양자 컴퓨터의 아이디어를 제시했다.

1989년, 이스라엘 출신으로 영국에서 활동하는 물리학자인 데이비드 도이치는 양자역학의 원리에 따라 작동되는 컴퓨터를 제안한다.

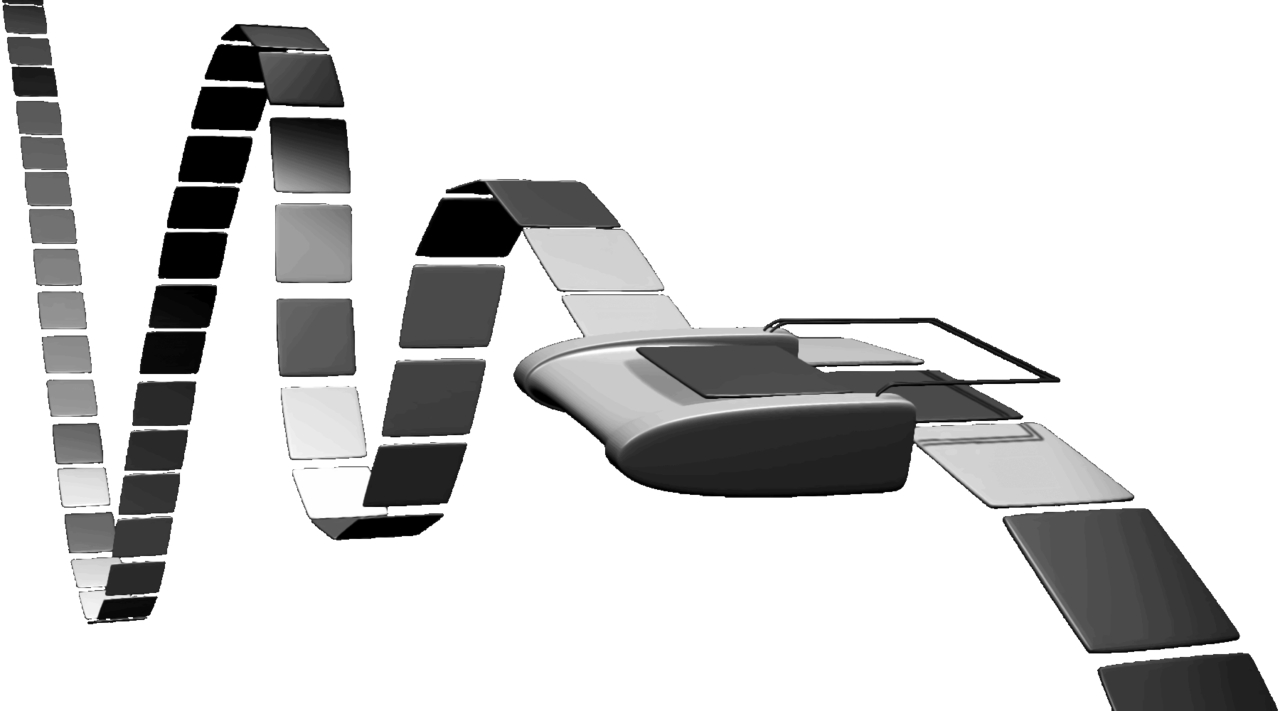

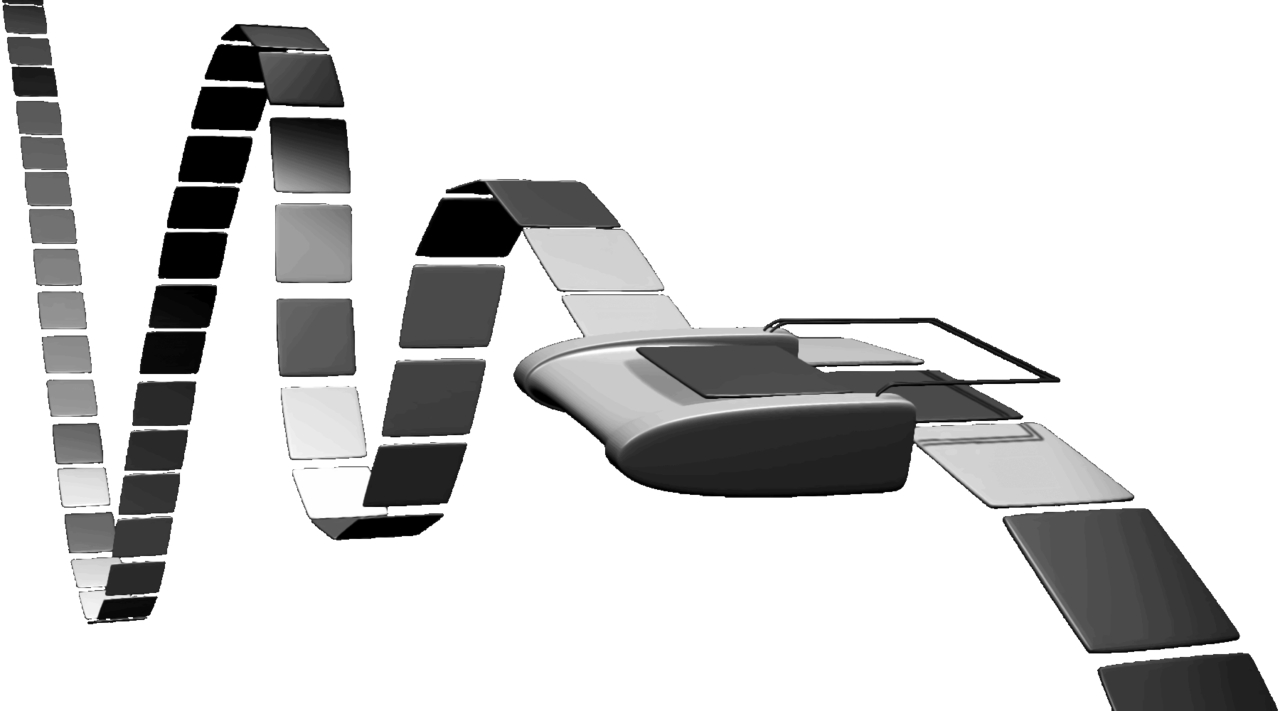

튜링 기계의 작동 방식

과거 영국의 수학자 앨런 튜링은 튜링 기계를 고안했다. 튜링 기계는 1차원 형태의 테이프에 기록된 0과 1의 나열을 입력받아 모든 일을 처리하는 기계다. 튜링은 이 기계를 통해 수학적인 모든 조작을 구현할 수 있다고 생각했다. 이런 튜링 기계는 범용 기계라 불린다.

도이치는 튜링 기계의 개념을 수학에서 물리학으로 확장했다. 모든 물리적 현상을 범용 기계로 묘사할 수 있을 것으로 생각한 것이다. 범용 기계는 양자역학을 구현해야 하므로 양자 컴퓨터가 되어야 한다.

양자 컴퓨터의 원리

양자역학 원리를 이용한 양자 컴퓨터는 기존의 컴퓨터, 즉 고전 컴퓨터와 전혀 다른 원리로 가동된다. 양자역학의 불확정성 원리는 서로 다른 특징을 갖는 상태의 중첩 때문에 측정값이 확률적으로 주어지게 된다. 이를 이용한 양자 컴퓨터는 하나의 비트가 동시에 0과 1을 갖는 것을 허용한다. 양자 컴퓨터의 이 비트를 퀀텀 비트(quantum bit), 줄여서 큐비트(Qbit)라고 부른다.

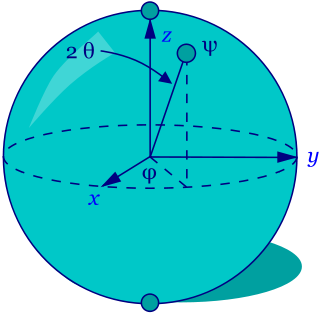

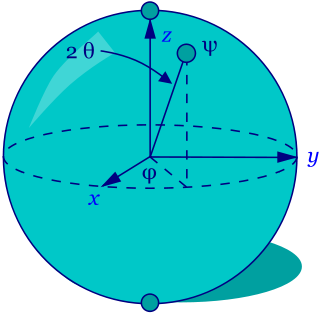

큐비트는 보통 ‘블로흐 구’라는 그래프로 설명한다. 중첩과 같은 현상은 우리가 사는 거시세계에서의 경험과 이질적이라 적당한 시각화 방법이 없다. 블로흐 구도 그저 일상적인 경험에 비추어 이해할 수 있도록 도식화한 것이다.

양자 컴퓨터의 기초인 큐비트를 그림으로 나타낸 블로흐 구 모형

블로흐 구에서, 구의 표면은 두 가지 결과에 대한 확률값의 합이 1인 점으로 가능한 모든 사건이 일어나는 경우를 확률적으로 표현한 것이다.

비트는 구의 양극에 해당하는 0과 1의 값만 지닐 수 있지만, 큐비트는 구의 어느 곳에도 존재할 수 있다. 북극과 남극을 제외한 나머지 표면에는 0과 1, 두 가지 사건에 대한 확률이 공존한다.

A라는 물체가 B에 있을 확률이 10%라면, 10번 중 한 번은 B 장소에 있고 나머지 9번은 B에 없다는 뜻이다. 이때 A는 결코 같은 시간에 두 곳에 존재할 수 없다. A가 B에서 발견된다면 A는 다른 곳에는 존재하지 않는다. 그러나 미시세계에서는 A가 B와 B가 아닌 곳에 '동시에' 존재한다. 다만 그 존재 확률이 B에서는 10%로 나타날 뿐이다.

큐비트는 데이터를 병렬적으로 동시에 처리할 수도 있으며, 수가 늘어날수록 처리 가능한 정보량도 기하급수적으로 늘어난다. 2개의 큐비트는 ‘00, 01, 10, 11’의 4가지 형태를 중첩하는 것이 가능하고 n개의 큐비트는 2n만큼 가능하다. 따라서 입력 정보량의 병렬 처리로 연산 속도는 기존의 디지털 컴퓨터와 비교할 수 없을 만큼 빨라진다.

양자 컴퓨터의 활용성

1994년, 미국의 이론 컴퓨터 과학자 피터 쇼어는 양자 소인수 분해 알고리즘이 고전 정보 이론의 경우보다 엄청나게 효율적이란 사실을 증명한다. 그는 쇼어 알고리즘을 제시하여, 양자 컴퓨터를 이용한 임의의 정수를 다항 시간 안에 소인수 분해하는 방법을 발표하였다.

현재 널리 쓰이는 RSA 암호는 공개키 암호 체계의 하나다. 암호화뿐만 아니라 전자서명이 가능한 최초의 알고리즘으로 알려져 있다. 소인수 분해에 기반을 두는 이 암호 체계는 작은 수의 소인수 분해는 어렵지 않지만 큰 수는 어렵다는 것에 기반을 두고 있다. 큰 수의 소인수 분해를 획기적으로 빠르게 할 수 있는 알고리즘이 발견된다면 이 암호 체계는 가치가 떨어질 것이다.

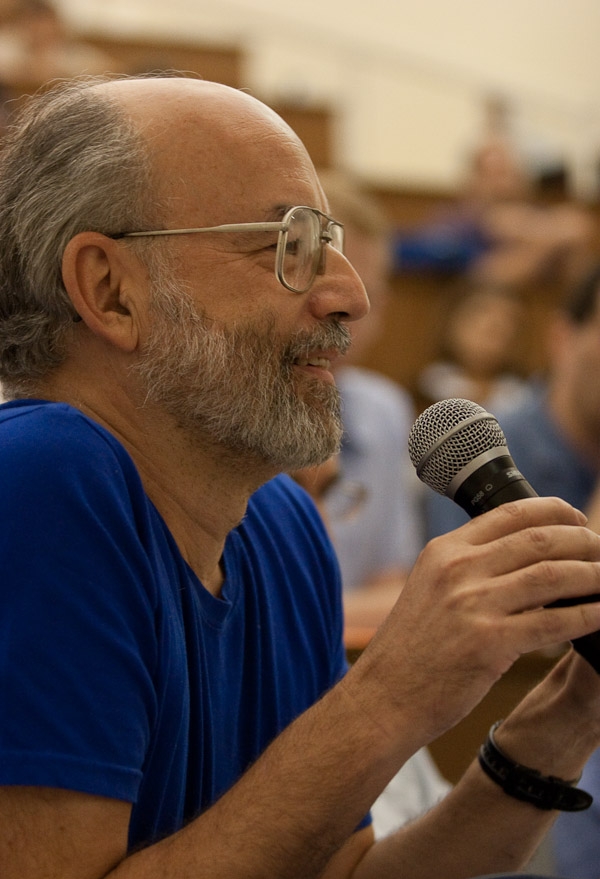

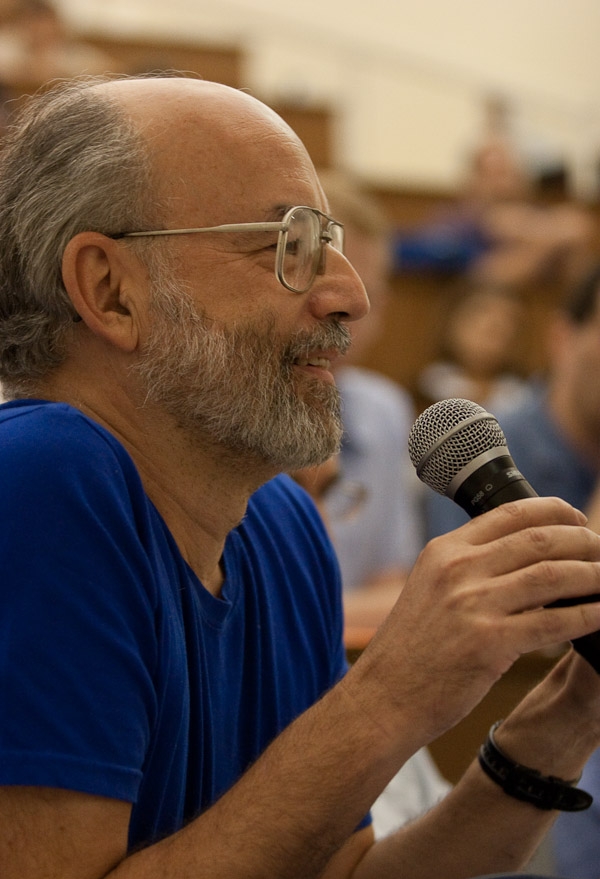

RSA의 'S'를 담당하는 아디 사미르

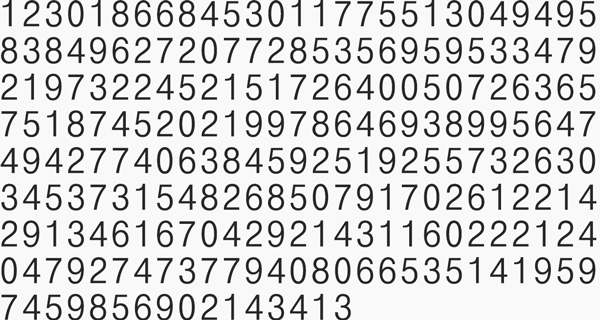

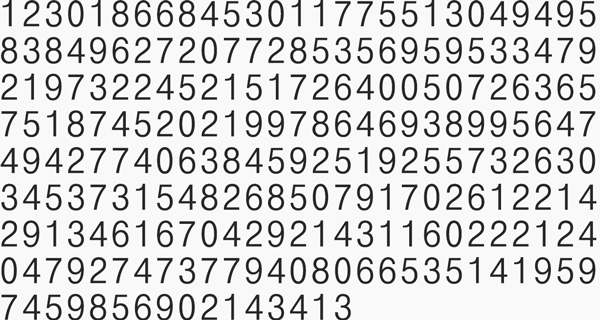

RSA-768이라 불리는 이

합성수는

이 두 소수의 곱으로 이루어져 있다.

이 소인수 분해를 위해 병렬로 연결된 수백 개의 컴퓨터가 2년 동안 사용되었다. 만약 AMD 옵테론 2.2GHz 싱글코어 컴퓨터로 처리했다면 2000년이 걸렸을 것이라고 소인수 분해 팀은 추측했다.

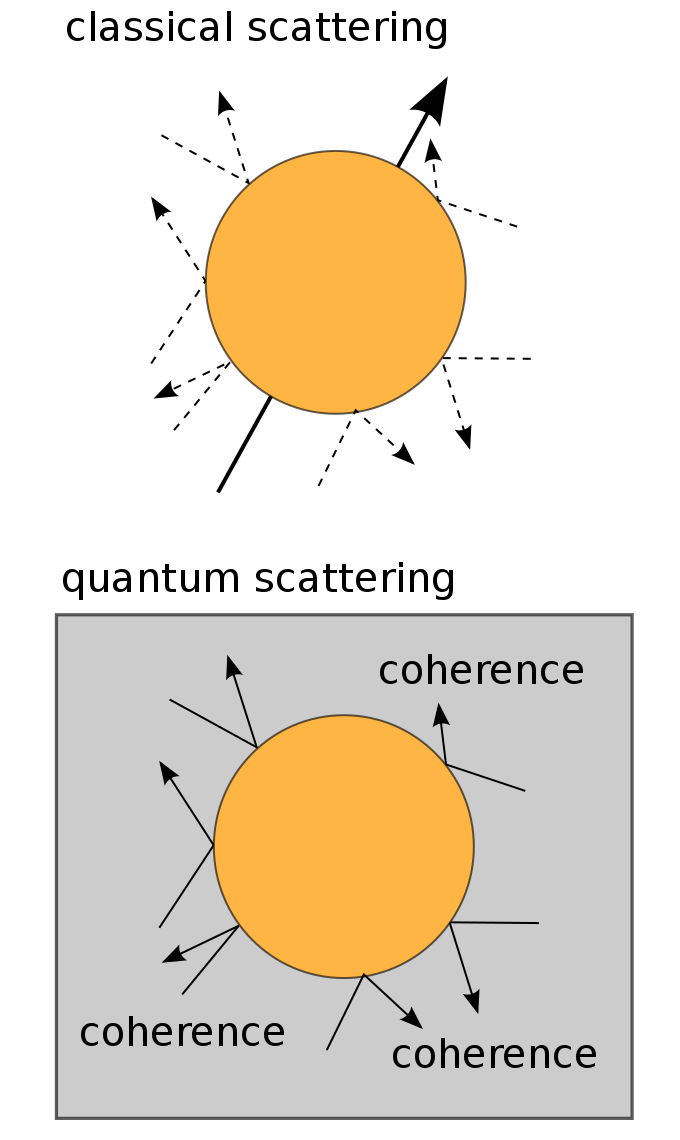

양자 컴퓨터의 가장 큰 특징은 중첩 상태를 이용한다는 것이다. 따라서 동시다발적 처리가 가능하다. 양자 컴퓨터는 중첩 상태가 유지되는 과정을 통해 연산을 수행하고 최종적으로 측정하여 결과를 얻는다.

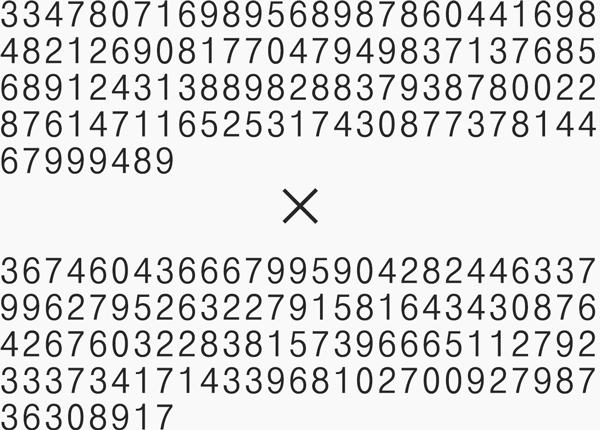

양자역학의 세계에선 관측도 관측 대상에 영향을 미친다

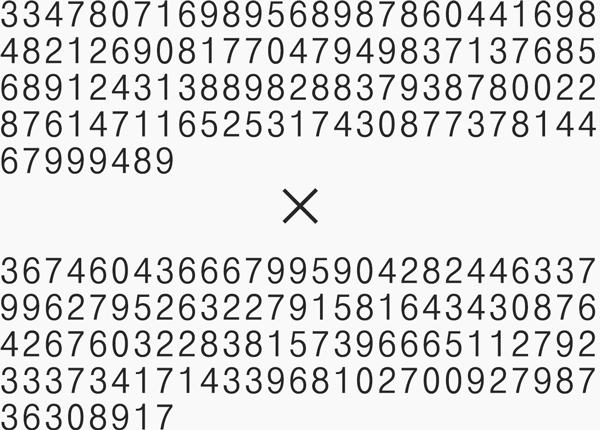

문제는 중첩이 유지되기 위해서는 결어긋남을 막아야 한다. 측정 당하지 말아야 한다는 것이다. 그러나 결어긋남을 피하는 것은 매우 어렵다.

컴퓨터 작업은 ‘순차적 작업’과 ‘조건 분기’의 조합이다. 조건 분기는 어떤 특정한 조건이 발생한 경우, 거기에 속하는 지시사항의 실행 여부를 결정하는 일이다. 그러나 지시사항의 실행 여부를 결정하기 위해서는 특정한 조건이 발생했는지 알아야 하고, 이는 관측이므로 결어긋남이 발생한다. 즉 측정하지 않고 지시사항의 실행 여부를 결정하는 방법이 필요하다.

다음 기사에서는 그 방법과 양자 컴퓨터의 상용화에 대해 알아보겠다.