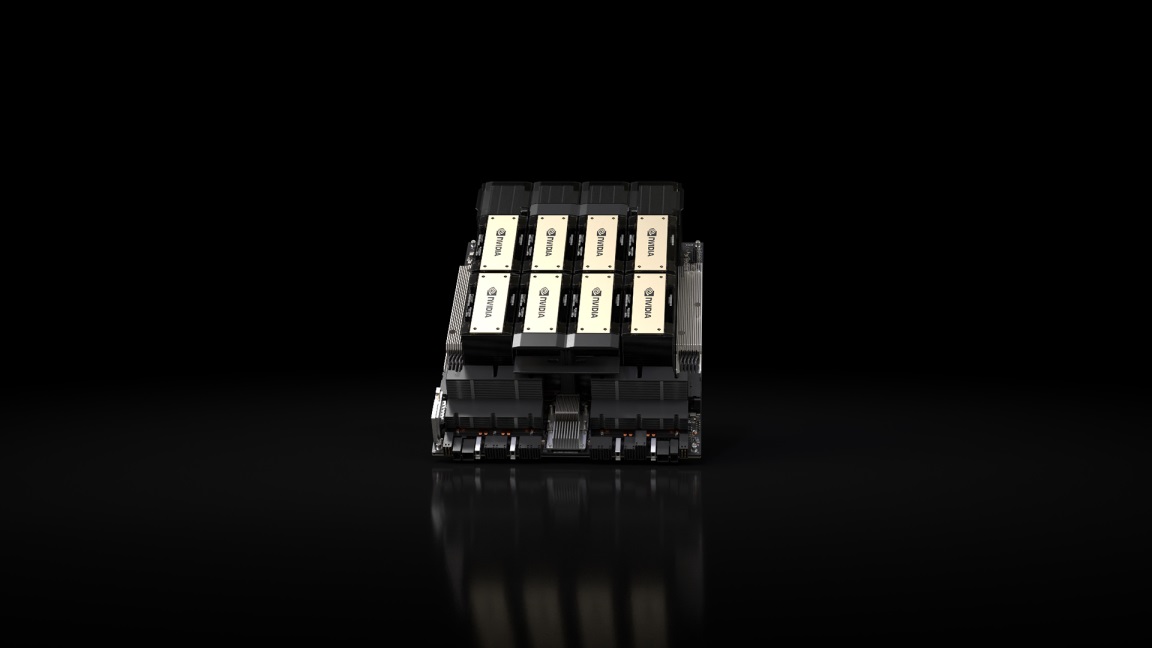

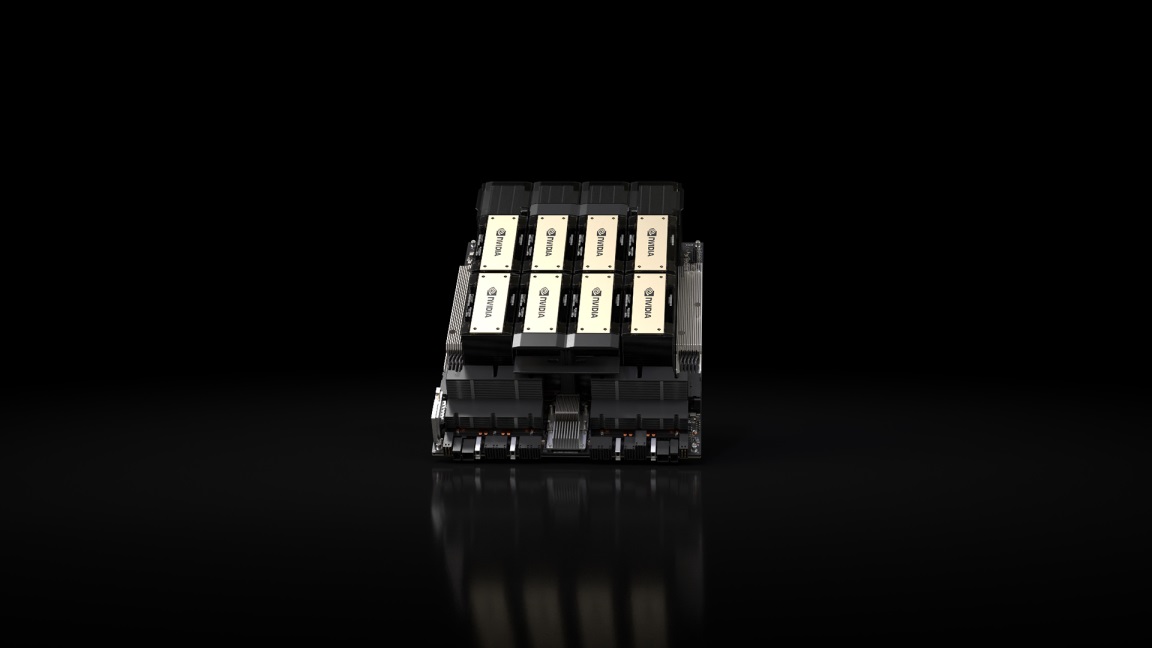

▲NVIDIA HGX H200 (Photo: NVIDIA)

HGX H200 systems and cloud instances on the way

NVIDIA, whose market value has been climbing with the arrival of the generative AI era, has unveiled its next-generation GPU for data centers and AI computing.

NVIDIA, a leader in AI computing technology, announced on the 14th that it is launching the NVIDIA HGX H200.

The platform is based on the NVIDIA Hopper™ architecture and features the NVIDIA H200 Tensor Core GPU with advanced memory. According to NVIDIA, it can handle massive amounts of data for generative AI and high-performance computing (HPC) workloads.

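The NVIDIA H200 is the first GPU to offer HBM3e, a faster and larger memory that accelerates generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads. With HBM3e, the H200 delivers 141GB of memory at 4.8 terabytes per second (TB/s), nearly double the capacity and 2.4 times the bandwidth of its predecessor, the NVIDIA A100.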
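The capacity and bandwidth comparison above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the H200 figures come from the article, while the A100 baseline (the 80GB variant at roughly 2.039 TB/s) is an assumption, since the article does not say which A100 model the comparison uses.

```python
# Figures quoted in the article for the H200.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# Assumption (not stated in the article): the baseline is the 80 GB A100,
# whose memory bandwidth is roughly 2.039 TB/s.
A100_MEMORY_GB = 80
A100_BANDWIDTH_TBPS = 2.039


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, A100_BANDWIDTH_TBPS)

print(f"capacity:  {capacity_ratio:.2f}x")
print(f"bandwidth: {bandwidth_ratio:.2f}x")
```

Under that assumed baseline, the ratios round to about 1.8x for capacity and 2.4x for bandwidth, in line with the "nearly double" and "2.4 times" figures quoted above.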

SK hynix, one of South Korea's two major memory makers, is known to lead in HBM3e technology and is reported to be supplying it exclusively to NVIDIA.

H200-based systems from the world's leading server manufacturers and cloud service providers are expected to ship in the second quarter of 2024.

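"To create intelligence with generative AI and HPC applications, vast amounts of data must be processed quickly and efficiently using large, fast GPU memory," said Ian Buck, vice president of hyperscale and HPC at NVIDIA. "With NVIDIA H200, the industry's leading end-to-end AI supercomputing platform just got faster at solving some of the world's most important challenges."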

The NVIDIA H200 will be available on NVIDIA HGX H200 server boards in four-way and eight-way configurations, which are compatible with both the hardware and software of HGX H100 systems. It is also available in the NVIDIA GH200 Grace Hopper™ Superchip with HBM3e, announced in August.

NVIDIA's global ecosystem of partner server manufacturers can also update their existing systems with the H200. Partners include ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron and Wiwynn.

Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to deploy H200-based instances starting next year, in addition to CoreWeave, Lambda and Vultr.

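HGX H200 is built on NVIDIA NVLink™ and NVSwitch™ high-speed interconnects. According to NVIDIA, it delivers top performance across a range of application workloads, including LLM training and inference for the largest models with more than 175 billion parameters. An eight-way HGX H200 provides more than 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory for generative AI and HPC applications.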
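The aggregate figures for the eight-way board follow from the per-GPU numbers. The sketch below is a rough back-of-the-envelope check, not a sizing guide: it assumes decimal units (1 TB = 1,000 GB), treats 32 petaflops as a lower bound, and estimates model size from weights alone at an assumed one byte per parameter (FP8), ignoring activations, KV cache and optimizer state.

```python
# Figures quoted in the article.
GPUS_PER_BOARD = 8          # eight-way HGX H200
MEMORY_PER_GPU_GB = 141     # HBM3e capacity per H200
BOARD_FP8_PFLOPS = 32       # "more than 32 petaflops", used as a lower bound
MODEL_PARAMS = 175e9        # 175 billion parameters

# Assumption for illustration: FP8 weights take one byte per parameter.
BYTES_PER_PARAM_FP8 = 1

# Aggregate memory across the board, assuming 1 TB = 1,000 GB.
total_memory_gb = GPUS_PER_BOARD * MEMORY_PER_GPU_GB
total_memory_tb = total_memory_gb / 1000

# Lower bound on FP8 compute per GPU implied by the board-level figure.
fp8_per_gpu_pflops = BOARD_FP8_PFLOPS / GPUS_PER_BOARD

# Weights-only footprint of a 175B-parameter model under the assumption above.
weights_gb = MODEL_PARAMS * BYTES_PER_PARAM_FP8 / 1e9

print(f"aggregate memory: {total_memory_gb} GB (~{total_memory_tb:.1f} TB)")
print(f"FP8 per GPU:      at least {fp8_per_gpu_pflops:.1f} petaflops")
print(f"175B FP8 weights: {weights_gb:.0f} GB, fits: {weights_gb < total_memory_gb}")
```

Eight GPUs at 141GB each come to 1,128GB, which matches the 1.1TB quoted for the board.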

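Meanwhile, NVIDIA's accelerated computing platform is supported by software tools that let developers and enterprises build and accelerate production-ready applications from AI to HPC. These include the NVIDIA AI Enterprise software suite for workloads such as speech, recommender systems and hyperscale inference.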

NVIDIA said the H200 will be available from global system manufacturers and cloud service providers starting in the second quarter of 2024.