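A research team led by Professor Jiyun Kim of the Department of Materials Science and Engineering at UNIST (President Yong Hoon Lee) has developed the world's first "wearable human emotion recognition technology," which can recognize emotions in real time.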

▲ (From left) UNIST Professor Jiyun Kim and first author Jin Pyo Lee

Wide range of applications expected, including emotion-based personalized services

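A technology that can recognize human emotions in real time has been developed. It is expected to find a wide range of applications, including next-generation wearable systems that deliver services based on the user's emotions.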

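UNIST (President Yong Hoon Lee) announced on the 29th that a team led by Professor Jiyun Kim of the Department of Materials Science and Engineering has developed the world's first "wearable human emotion recognition technology" capable of real-time emotion recognition.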

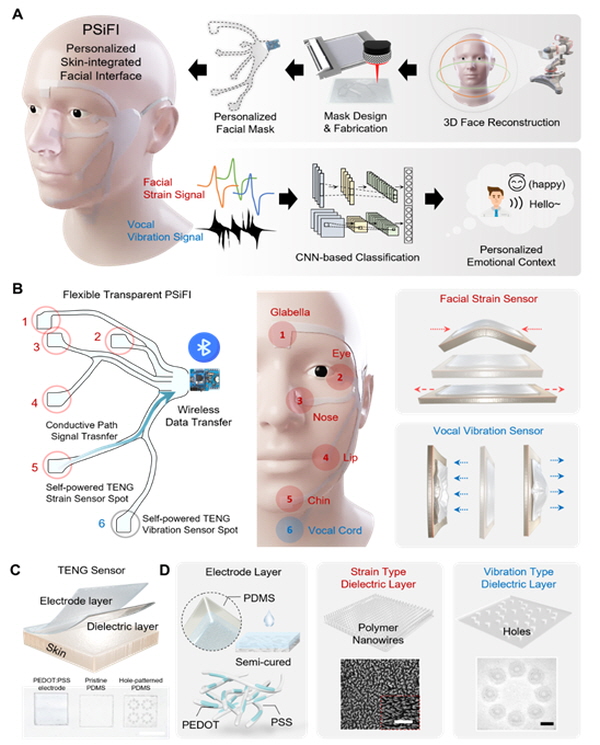

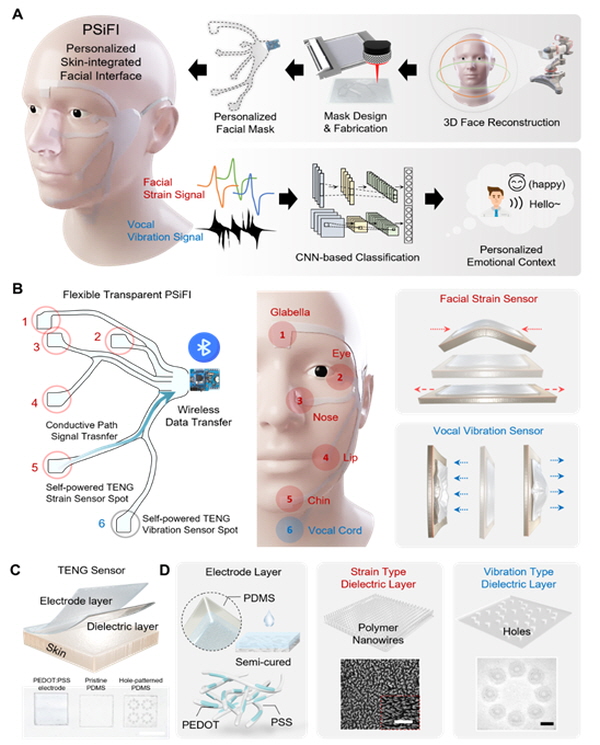

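The system is based on triboelectrification, the phenomenon in which two objects that are rubbed together and then separated become positively and negatively charged, respectively. Because the system is also self-powered, it needs no external power source or complex measurement equipment to acquire data.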

"Building on this technology, we developed a personalized skin-integrated facial interface (PSiFI) system that can be tailored to each individual," Professor Kim said.

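The team used a semi-curing technique that keeps the material in a soft, solid state. With this technique they fabricated a highly transparent conductor and used it for the electrodes of the triboelectric devices. They also produced personalized masks using multi-angle photography. The result is a self-powered system that is flexible, stretchable, and transparent.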

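The team designed the system to detect facial muscle deformation and vocal cord vibration simultaneously and to integrate the two signals for real-time emotion recognition. The resulting information can be used in a virtual reality (VR) "digital concierge" that provides services tailored to the user's emotions.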
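The release does not say how the two signals are combined or classified. Purely as an illustration, and not the team's method, the sketch below assumes each sensor yields a short numeric feature vector. The hypothetical `fuse` helper concatenates them (feature-level fusion), and a nearest-centroid classifier, a swapped-in technique chosen because it can be fit from only a handful of labelled examples, picks the emotion whose average fused vector is closest. All feature values, channel counts, and emotion labels are made up.

```python
import math
from collections import defaultdict

# Hypothetical sketch: feature values, channel counts, and labels are
# illustrative assumptions, not data or methods from the study.

def fuse(face_strain, voice_vibration):
    """Feature-level fusion: concatenate the per-modality feature vectors."""
    return list(face_strain) + list(voice_vibration)

def fit_centroids(samples, labels):
    """Average the fused vectors of each emotion into one centroid."""
    groups = defaultdict(list)
    for x, y in zip(samples, labels):
        groups[y].append(x)
    return {y: [sum(col) / len(col) for col in zip(*xs)] for y, xs in groups.items()}

def predict(centroids, x):
    """Return the emotion whose centroid is closest (Euclidean distance) to x."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))

# Two made-up training examples per emotion:
# ((facial strain features), (vocal vibration feature)), label
training = [
    (([1.0, 0.9], [0.2]), "happy"),
    (([0.9, 1.0], [0.3]), "happy"),
    (([0.1, 0.2], [0.1]), "sad"),
    (([0.2, 0.1], [0.0]), "sad"),
    (([0.5, 0.5], [1.0]), "angry"),
    (([0.6, 0.4], [0.9]), "angry"),
]
samples = [fuse(face, voice) for (face, voice), _ in training]
labels = [label for _, label in training]
centroids = fit_centroids(samples, labels)

print(predict(centroids, fuse([0.95, 0.95], [0.25])))  # happy
```

Fusing before classification lets a single model weigh facial and vocal cues together; a real system would replace the toy features with features extracted from the sensor signals.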

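"With the system we developed, real-time emotion recognition is possible after only a few training rounds and without complex measurement equipment," said first author Dr. Jin Pyo Lee, a postdoctoral researcher. "It shows potential for use in portable emotion recognition devices and as a component of next-generation emotion-based digital platform services."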

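The team used the system to run real-time emotion recognition experiments. Beyond collecting multimodal data such as facial muscle deformation and voice, the system also supported transfer learning that makes use of the collected data.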

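The system achieved high emotion recognition accuracy after only a few training rounds. Because it is custom-made for each user and operates wirelessly, it is also comfortable to wear and convenient to use.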

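The team also applied the system in a VR environment as a "digital concierge," staging scenarios such as a smart home, a private movie theater, and a smart office. By identifying the user's emotions in each scenario, the system could recommend music, movies, books, and other content, further confirming that user-tailored services are feasible.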

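"For people and machines to interact at a high level, human-machine interface (HMI) devices must also be able to collect diverse types of data and handle complex, integrated information," said Professor Jiyun Kim of the Department of Materials Science and Engineering. "This study will serve as an example showing that even emotions, a highly complex form of human information, can be put to use through next-generation wearable systems."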

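The study was conducted in collaboration with Professor Lee Pooi See of the School of Materials Science and Engineering at Nanyang Technological University, Singapore. It was published online on January 15 in Nature Communications, a leading international journal. The research was supported by the National Research Foundation of Korea (NRF) under the Ministry of Science and ICT, and by the Korea Institute of Materials Science (KIMS).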

▲ Concept of a personalized wireless facial interface for emotion recognition based on multimodal information