As AI products gain traction with consumers, server products built for AI are arriving one after another, driven by the growing data transfer and computing demands that AI generates.

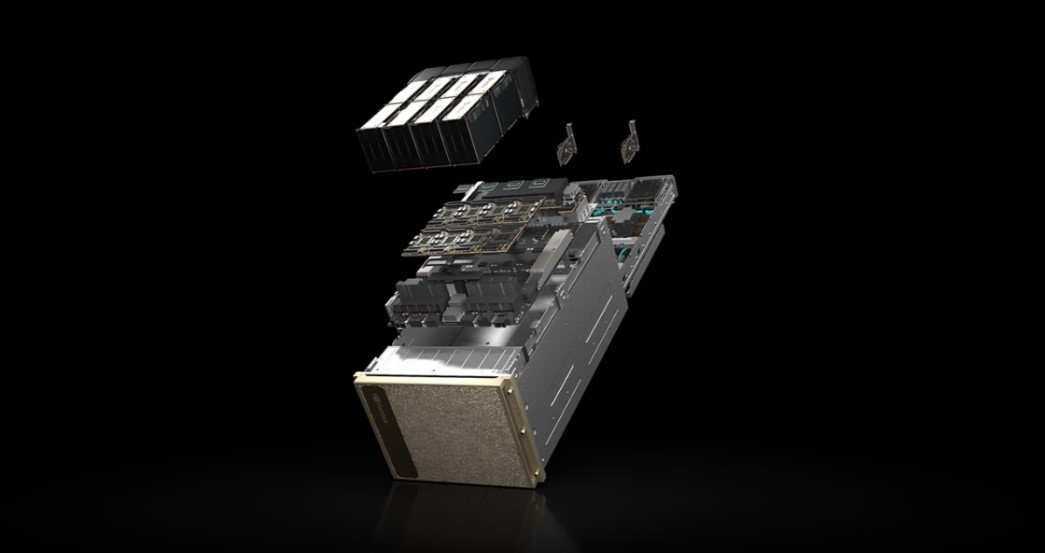

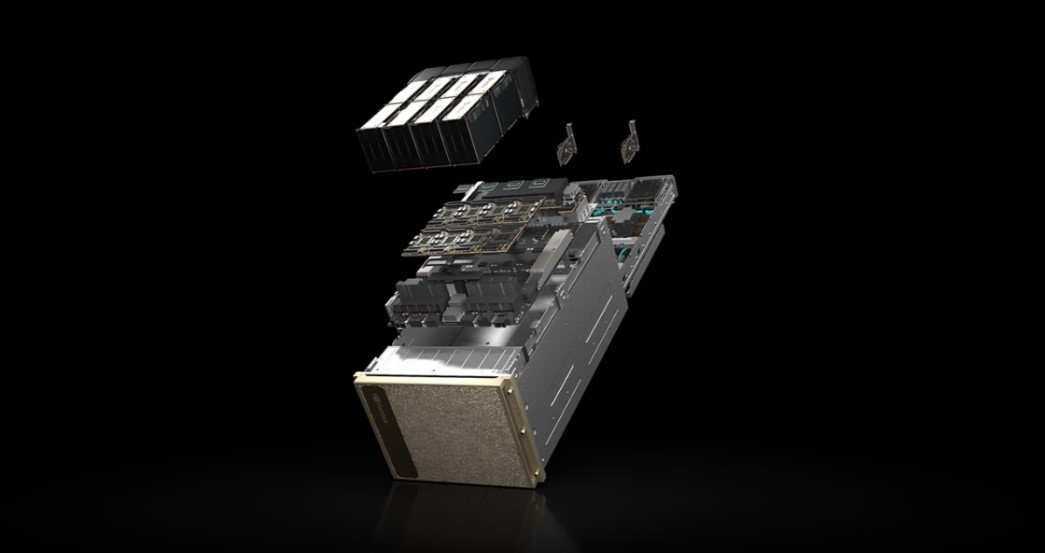

▲NVIDIA DGX H100 system (Image: NVIDIA)

6x the performance and 2x the interconnect speed of the previous generation

Targeting smart parking, generative AI, LLMs, and healthcare

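NVIDIA announced on the 3rd that its NVIDIA DGX H100 systems are now commercially available. The DGX H100, the company's newest and highest-specification data center product, entered full launch earlier this year, and amid intensifying competition in the AI market a range of customers have reportedly begun putting it to commercial use.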

The DGX H100 system carries eight NVIDIA H100 GPUs, each with a Transformer Engine designed to accelerate generative AI models. It delivers roughly 6x the performance of the previous-generation DGX A100 on average, offers up to 640GB of total GPU memory, and provides 18 NVIDIA NVLink connections per GPU with 900GB/s of bidirectional GPU-to-GPU bandwidth.
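As a quick sanity check on the figures quoted above, the sketch below derives per-GPU memory and per-link NVLink bandwidth from the stated totals. It assumes memory is divided evenly across the eight GPUs and bandwidth evenly across the 18 links; the variable names are illustrative and do not come from NVIDIA.

```python
# Figures quoted in the article for one DGX H100 system.
num_gpus = 8
total_gpu_memory_gb = 640        # total GPU memory, GB
nvlinks_per_gpu = 18
gpu_bidir_bandwidth_gb_s = 900   # bidirectional GPU-to-GPU bandwidth, GB/s

# Assumption: resources are split evenly across GPUs and across links.
memory_per_gpu_gb = total_gpu_memory_gb / num_gpus
bandwidth_per_link_gb_s = gpu_bidir_bandwidth_gb_s / nvlinks_per_gpu

print(f"Memory per GPU: {memory_per_gpu_gb:.0f} GB")
print(f"Bandwidth per NVLink: {bandwidth_per_link_gb_s:.0f} GB/s")
```

Under those assumptions, the totals work out to 80GB of memory per GPU and 50GB/s of bidirectional bandwidth per NVLink connection.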

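The new system includes two 400Gbps network interfaces and can link hundreds of DGX H100 nodes into an AI supercomputer over ultra-low-latency NVIDIA Quantum InfiniBand, which is twice as fast as the previous generation. Energy efficiency, measured in kilowatts per petaflop, has also improved twofold over the previous generation.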

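Usable in smart manufacturing and smart factories worldwide, the NVIDIA DGX H100 system is expected to be applied across a range of deep learning applications, including smart valet parking, generative AI and LLMs, and healthcare solutions.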

NVIDIA said the DGX H100 system includes NVIDIA AI Enterprise software, which accelerates data science pipelines and simplifies the development and deployment of generative AI, computer vision, and other workloads.

Manuvir Das, NVIDIA's vice president of enterprise computing, announced the commercial availability of DGX H100 systems in a talk at MIT Technology Review's Future Compute event.

Customers are reportedly already deploying DGX H100 systems or weighing their adoption. The Boston Dynamics AI Institute, a research organization under robotics leader Boston Dynamics, said it will use a DGX H100.

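Initially, the DGX H100 will handle reinforcement learning workloads, a core technique in robotics; later, it will be connected directly to prototype bots in the lab to run AI inference. "Having a high-performance computer in a relatively compact footprint makes it easy to develop and deploy AI models," added Al Rizzi, CTO of the AI Institute.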

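In Germany, DeepL plans to use several DGX H100 systems to expand services such as translation between dozens of languages, which it provides to customers including Nikkei, Japan's largest publisher. DeepL recently launched DeepL Write, an AI writing assistant.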

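Meanwhile, as LLM products such as ChatGPT and generative AI services energize the AI market, demand for AI servers on the hardware side is rising sharply. Demand for high-performance server GPUs for AI computation is growing, and demand for high-capacity, high-performance memory such as HBM3 and DDR5 is climbing with it.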