As artificial intelligence becomes more sophisticated and widespread, demand for on-device AI processing that does not rely on cloud resources is growing rapidly.

▲ STM Edge AI processing webinar (Image: ST, e4ds EEWebinar)

Developer-Friendly STM Edge AI Processing

STM32 Cube.AI and NanoEdge AI Studio

“AI solutions and ecosystem support based on ST MCUs”

STMicroelectronics recently presented a webinar on STM Edge AI processing at e4ds EEWebinar.

In the webinar, Moon Hyun-soo, a manager at STMicroelectronics, presented ST's solutions and ecosystem that enable edge AI processing on STM32 MCUs.

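MCUs are usually deployed in what are called edge platforms, where they sit at the endpoints of individual devices and systems. For example, they acquire sensor data and send it to a host, or control LEDs, motors, and similar components.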

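“Embedded applications will collect more data, which will increase demand for data-centric analysis and data-driven processing,” Moon said. “As IoT devices proliferate, more data is collected, and processing and analyzing it in real time with edge AI makes a wide range of applications possible, including smart cities, smart homes, and industrial automation.”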

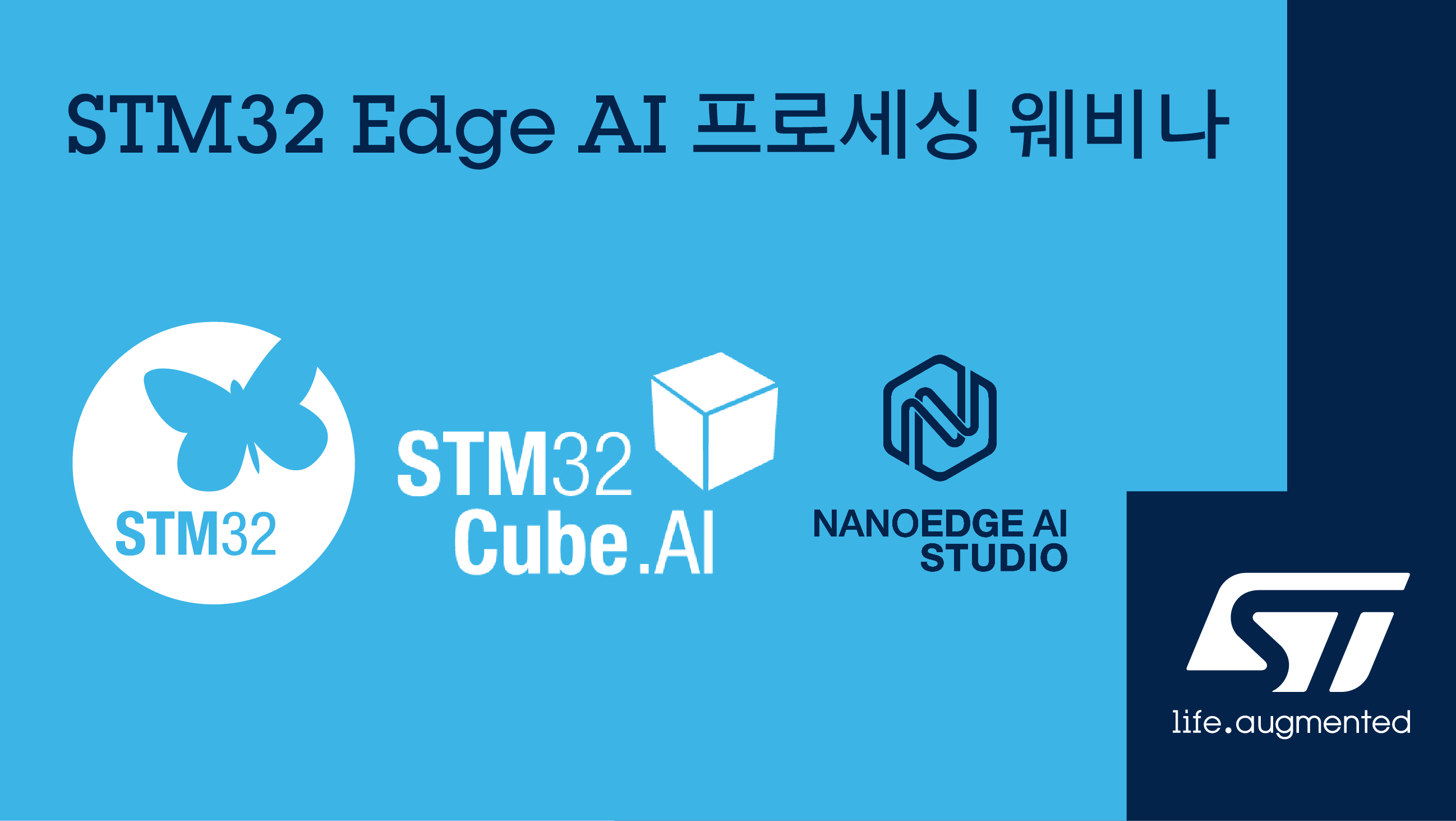

In response, ST continues to release and support developer-friendly solutions that enable edge AI processing on the STM32 MCU platform, including STM32 Cube.AI and NanoEdge AI Studio.

▲ Edge AI tools available for the STM32 family and the applications they can target (Image: ST, e4ds EEWebinar)

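For deep-learning neural networks, the workflow in ST's AI development environment is divided into three stages: data preparation, data science, and porting the deep-learning model to the MCU. With the two tools mentioned above, developers can convert a deep-learning model into a model in C-code form.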

STM32 Cube.AI converts AI models built with general-purpose machine learning tools such as TensorFlow Lite, Keras, and ONNX into C-code models.
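To make the idea of a "C-code model" concrete, here is a minimal Python sketch of the general principle: trained weights end up as constant arrays that firmware can compile in. The helper `emit_c_array` and the array layout are hypothetical illustrations, not the files STM32 Cube.AI actually generates.

```python
def emit_c_array(name, weights, per_line=4):
    """Render a list of floats as a C `static const float` array definition.

    Hypothetical helper for illustration only; it does not reproduce the
    output format of STM32 Cube.AI.
    """
    lines = [f"static const float {name}[{len(weights)}] = {{"]
    for i in range(0, len(weights), per_line):
        chunk = ", ".join(f"{w:.6f}f" for w in weights[i:i + per_line])
        lines.append(f"    {chunk},")
    lines.append("};")
    return "\n".join(lines)


if __name__ == "__main__":
    # Assumed example weights; a real model would have many more values.
    print(emit_c_array("dense_kernel", [0.5, -1.25, 0.0, 2.0, 0.125]))
```

Storing the weights as `const` data is what typically lets a linker keep them in flash rather than RAM, which matters on memory-constrained MCUs.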

With it, developers can implement anomaly detection, sensing, audio, and vision applications on STM32 MCUs. ST also provides the X-LINUX-AI software, which offers Linux-based AI examples for the STM32 MPU family.

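It also provides AI model optimization features. “STM32 Cube.AI comes with a variety of examples, offered on GitHub under the name Model zoo,” Moon said. “Model zoo includes vision, audio, and sensing examples, and developers can use these models as they are or use the training scripts with different training data to create new models.”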

Note, however, that while developers can use TensorFlow Lite and Keras models with STM32 Cube.AI directly, models from PyTorch, MATLAB, or scikit-learn must first be converted to the ONNX format.
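The import rule above can be summarized as a small lookup. This is only a sketch of the decision described in the article; the table and the function `import_route` are hypothetical and not part of any ST tool.

```python
# Import routes as described in the article. These names are hypothetical
# illustrations and do not correspond to an ST API.
DIRECT = {"tensorflow lite", "keras", "onnx"}
VIA_ONNX = {"pytorch", "matlab", "scikit-learn"}


def import_route(framework):
    """Return the steps needed before STM32 Cube.AI can take the model."""
    key = framework.strip().lower()
    if key in DIRECT:
        return ["import into STM32 Cube.AI"]
    if key in VIA_ONNX:
        return ["export to ONNX", "import into STM32 Cube.AI"]
    raise ValueError(f"route not covered by the article: {framework!r}")


if __name__ == "__main__":
    for fw in ("Keras", "PyTorch"):
        print(fw, "->", import_route(fw))
```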

STM32 Cube.AI supports a graph optimizer, quantized models (Quantized Model Support), and a memory optimizer.
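For readers unfamiliar with quantized models, the following sketch shows the common 8-bit affine quantization scheme, in which each float is stored as one signed byte plus a shared scale and zero point. It illustrates the general technique only; the function names are hypothetical, and the article does not say which scheme STM32 Cube.AI expects.

```python
def quantize_int8(values):
    """Affine-quantize floats to int8; returns (codes, scale, zero_point)."""
    # The representable range must include 0.0 so that zero maps to a code.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in codes]


if __name__ == "__main__":
    weights = [-1.0, -0.2, 0.0, 0.5, 1.0]  # assumed example values
    codes, scale, zp = quantize_int8(weights)
    print(codes, scale, zp)
    print(dequantize(codes, scale, zp))
```

Each weight shrinks from four bytes (float32) to one, at the cost of a rounding error on the order of one quantization step.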

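In particular, to use memory efficiently, data can be split between internal and external memory, and on devices with small internal flash the AI model parameters can be placed in external memory. Frequently used parameters can also be stored in SRAM or in TCM memory close to the core to reduce latency.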
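The placement idea can be sketched as a simple greedy planner: the most frequently read tensors go to the fastest region that still has room, and everything else spills to slower memory. This is an illustration under assumed sizes and access counts, not a description of how the STM32 Cube.AI memory optimizer works; all tensor names, region names, and capacities below are hypothetical.

```python
def place_tensors(tensors, regions):
    """Greedy placement: the hottest tensors get the fastest region with room.

    tensors: list of (name, size_bytes, reads_per_inference)
    regions: list of (region_name, capacity_bytes), ordered fastest first
    Returns {tensor_name: region_name}.
    """
    free = {name: cap for name, cap in regions}
    placement = {}
    # Visit tensors from most to least frequently read.
    for name, size, _reads in sorted(tensors, key=lambda t: t[2], reverse=True):
        for region, _cap in regions:
            if free[region] >= size:
                free[region] -= size
                placement[name] = region
                break
        else:
            raise MemoryError(f"no region can hold {name} ({size} bytes)")
    return placement


if __name__ == "__main__":
    # Assumed example figures, chosen only to show the spill-over behavior.
    tensors = [
        ("conv1_weights", 8_000, 900),
        ("conv2_weights", 48_000, 400),
        ("dense_weights", 300_000, 1),
    ]
    regions = [("TCM", 16_000), ("SRAM", 64_000), ("external flash", 1_000_000)]
    print(place_tensors(tensors, regions))
```

A greedy pass is not optimal in general, but it captures the trade-off the article describes: small, hot parameters near the core, and large, rarely read ones in external memory.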

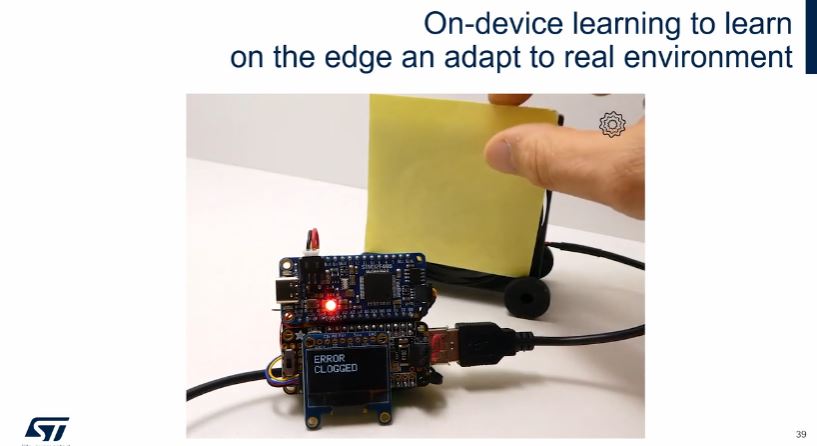

▲ A scene from the NanoEdge AI demo video (Image: ST, e4ds EEWebinar)

NanoEdge AI Studio automatically handles data preprocessing, model generation, and conversion to a C-code AI model, so even developers with no AI modeling experience can create a model easily.

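Developers can generate an AI model simply by supplying a training data set to NanoEdge AI Studio, without needing to understand general-purpose machine learning tools such as TensorFlow Lite, Keras, or ONNX. This is expected to shorten development time.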

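“ST is well aware of the current demand for edge AI processing in the embedded market,” Moon said. “We are putting a great deal of effort into providing a variety of AI solutions and an ecosystem based on ST MCUs.”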