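AMD Instinct MI300X accelerators are coming to Oracle Cloud Infrastructure to support demanding AI applications. Customers including Fireworks AI are expected to use the new OCI compute instances to power their AI inference and training workloads.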

OCI Supercluster supports up to 16,384 AMD Instinct MI300X GPUs

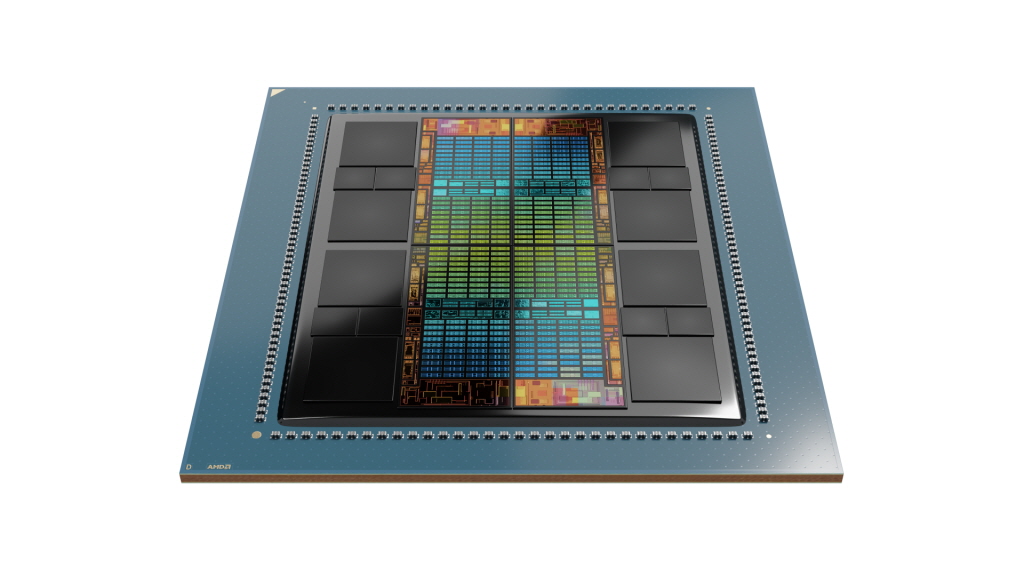

AMD announced on the 27th that Oracle Cloud Infrastructure (OCI) has adopted ROCm™ open software and AMD Instinct™ MI300X accelerators to power BM.GPU.MI300X.8, its latest OCI Compute Supercluster instance.

OCI Supercluster with AMD MI300X supports up to 16,384 GPUs in a single cluster for AI models with hundreds of billions of parameters. It uses the same ultrafast network fabric technology as OCI's other accelerators.

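The OCI bare metal instances are designed to deliver industry-leading memory capacity and bandwidth. They are built to run demanding AI workloads, including high-throughput large language model (LLM) inference and training, and companies such as Fireworks AI have already adopted them.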

Andrew Dieckmann, corporate vice president and general manager of AMD's Data Center GPU business, said, "AMD Instinct MI300X and ROCm open software continue to gain momentum as trusted solutions for powering critical workloads such as OCI AI workloads."

He added, "As these solutions expand further into growing AI-intensive markets, the combination will benefit OCI customers with improved performance, efficiency, and system design flexibility."

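Donald Lu, senior vice president of software development at Oracle Cloud Infrastructure, said, "The inference capabilities of AMD Instinct MI300X accelerators add to OCI's extensive lineup of high-performance bare metal instances and remove the overhead of the virtualized compute commonly used for AI infrastructure. We are excited to offer more choice at a competitive price to customers looking to accelerate their AI workloads."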

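OCI focuses on two things. The first is AI inference and training capability that can support latency-optimized use cases even at larger batch sizes. The second is performance that can fit the largest LLMs on a single node. AMD Instinct MI300X has undergone extensive testing against these OCI criteria and is attracting attention from AI model developers.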

Fireworks AI provides a fast platform designed for building and deploying generative AI. With more than 100 models, Fireworks AI is taking advantage of the performance and benefits of OCI with AMD Instinct MI300X.

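Lin Qiao, CEO of Fireworks AI, said, "Fireworks AI helps enterprises build and deploy compound AI systems across a wide range of industries and use cases. The memory capacity available with AMD Instinct MI300X and ROCm open software lets our customers keep growing their models and, in turn, scale their services."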