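The KIEI Industrial Education Institute held a "Seminar on Business Models by Sector Using Generative AI Technology" on the 14th. The seminar covered three topics: an introduction to the generative AI technology paradigm and research on its applications, laws and regulations related to generative AI, and AI technology development and business strategies by sector.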

Transformer-based hyperscale AI advances through fine-tuning

Korean researchers propose large models and pursue sector-specific strategies

Discussion continues on rules for copyright ownership, standards, and burden of proof

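With AI technology rapidly becoming mainstream and part of everyday life, experts argued that, as the paradigm shifts to hyperscale AI, companies need to selectively acquire high-quality data and build their strategies on sector-specific business models.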

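In Korea, support is being provided for research on acquiring the information needed to train generative AI models and for devising business strategies. There is also active discussion about establishing legal frameworks that avoid conflicts with copyright and other ethical issues.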

■ How AI evolved into hyperscale models

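The strength of hyperscale AI models is that, given an instruction with only a few samples, they can grasp the person's intent and offer an answer, and they hold vast potential to understand and learn much as a person does.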

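Generative AI models such as ChatGPT are based on the Transformer architecture, which was originally designed as an encoder-decoder structure. Such models go through fine-tuning, a process in which additional data is used for further training so that the parameters are refined. In other words, the training paradigm for language models has shifted to self-supervised learning, in which a pre-trained model is built and then optimized for use.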

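An "AI language model" is a language model that uses self-supervised learning on large volumes of text data to pre-train general-purpose semantic representations, which are then applied to a variety of tasks. Development began with a focus on the encoder (understanding) and moved toward the decoder (generation), with Google introducing BERT as one of the first such pre-trained models.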

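AI has advanced rapidly since then. OpenAI built GPT by maximizing the performance of the decoder. ChatGPT, developed by OpenAI, is a generative AI specialized for text. Generative AI more broadly can produce sentences, images, speech, and other data in a human-like manner.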

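In particular, the emergence of hyperscale AI, meaning models with more than one billion parameters, can be seen as a landmark. GPT-3, a language generation model, improved its accuracy in predicting the next word through deeper training.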
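To make "predicting the next word" concrete, here is a minimal sketch using simple bigram counts. It is only an illustration of the prediction task, not how GPT-3 works internally. GPT-3 uses a Transformer network with billions of parameters, and the toy corpus and function names below are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return (most likely next word, estimated probability), or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    best, freq = followers.most_common(1)[0]
    return best, freq / sum(followers.values())


# Hypothetical toy corpus, invented for illustration only.
corpus = [
    "the model generates text",
    "the model predicts words",
    "the model generates images",
]
counts = train_bigram(corpus)
prediction = predict_next(counts, "model")
```

A large language model replaces the count table with a learned neural network, but the objective is the same: assign a high probability to the word that actually comes next.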

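Although no paper detailing it has been released, ChatGPT-4 is presumed to be developed in three stages. First, a prompt is given to GPT and it answers, and the model is fine-tuned on information from human interaction with those answers. Next, human preferences among responses are ranked. Finally, the model is trained and reinforced so that its probabilities align with those preferences.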
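The ranking stage can be sketched with a pairwise preference loss. This is a common logistic (Bradley-Terry style) formulation, shown here as an assumption. The article does not say which loss OpenAI uses, and the function names and scores are hypothetical.

```python
import math


def pairwise_preference_loss(score_preferred, score_rejected):
    """Negative log-probability that the preferred answer outranks the other.

    Equals -log(sigmoid(score_preferred - score_rejected)).
    """
    diff = score_preferred - score_rejected
    return math.log1p(math.exp(-diff))


def ranking_loss(scores_in_preference_order):
    """Average pairwise loss over all (better, worse) pairs in a ranked list.

    The list holds model scores for answers, ordered from the answer humans
    liked most to the one they liked least.
    """
    pairs = [
        (better, worse)
        for i, better in enumerate(scores_in_preference_order)
        for worse in scores_in_preference_order[i + 1:]
    ]
    if not pairs:
        return 0.0  # fewer than two answers: nothing to compare
    return sum(pairwise_preference_loss(b, w) for b, w in pairs) / len(pairs)


# Hypothetical scores: the first list agrees with the human ranking,
# the second contradicts it.
agreeing = ranking_loss([2.0, 1.0, 0.0])
contradicting = ranking_loss([0.0, 1.0, 2.0])
```

The loss is smaller when the model's scores agree with the human ranking, so minimizing it pushes the model toward the preferred answers.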

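The paradigm of hyperscale AI development is shifting toward demand for general-purpose AI, and both the volume of data required and the scale of evaluation are growing. However, critics continue to point out that quality has not improved in step with dataset size, so filtering is becoming more important. The point is that obtaining the data one actually needs now matters more than sheer scale. Shin Sa-im, head of the AI Research Center at the Korea Electronics Technology Institute (KETI), said, "In the end, we came to focus on research into how to pull out only what we need."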
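As a rough illustration of what such filtering can look like, the sketch below applies three simple heuristics: a minimum length, a cap on repeated words, and removal of duplicates. The thresholds and function name are assumptions chosen for this example, not a method described at the seminar. Production pipelines typically use far more elaborate quality signals.

```python
def filter_corpus(documents, min_words=5, max_repeat_ratio=0.5):
    """Keep documents that are long enough, not too repetitive, and not duplicates."""
    seen = set()
    kept = []
    for doc in documents:
        text = " ".join(doc.split())  # normalize whitespace
        words = text.lower().split()
        if len(words) < min_words:
            continue  # too short to be useful
        repeat_ratio = 1 - len(set(words)) / len(words)
        if repeat_ratio > max_repeat_ratio:
            continue  # mostly the same words repeated
        key = text.lower()
        if key in seen:
            continue  # duplicate, ignoring case and spacing
        seen.add(key)
        kept.append(text)
    return kept


# Hypothetical sample documents, invented for illustration only.
sample = [
    "Generative AI models need high quality training data.",
    "generative AI models need  high quality training data.",
    "too short",
    "spam spam spam spam spam spam spam spam",
]
filtered = filter_corpus(sample)
```

Only the first sample document survives: the second is a duplicate once case and spacing are normalized, the third is too short, and the fourth is almost entirely repetition.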

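In Korea, research institutes, large companies such as Naver, and the three mobile carriers have been conducting research. ETRI's KorBERT model was released in 2019, and in 2021 KE-T5 was announced with roughly double the performance. SKT is expanding its A. (Adot) model, KT its Mi:dm model, and Naver its HyperCLOVA model.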

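Because this work requires high-performance GPUs, memory, cores, and other resources, costs keep rising, and development is limited mostly to large companies. Shin said, "The US, China, and others, as well as large Korean companies and the government, are making efforts, but the high cost makes it hard to compete."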

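Shin added, "Fine-tuning with high-quality datasets is becoming the key. With infrastructure for hyperscale AI research hard to secure at present, Korean researchers are proposing medium-to-large models capable of inference and moving toward technologies that can be turned into sector-specific services."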

■ Generative AI disputes and the state of legal frameworks

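AI is not inherently fair, so it may disclose personal information, generate fake news, or produce profanity or sexist remarks. Because it cannot recognize unethical situations, more deliberation is needed before commercialization.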

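According to attorney Yang Jin-young of the law firm Minwho, the rights of generative AI and the specific regulations governing it are still being drafted. Legal frameworks for generative AI are expected to be human-centered, taking into account the principles of human dignity, the public good of society, and the fitness of technology for its purpose. The process appears to be struggling because case law is scarce and the many stakeholders hold divergent opinions.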

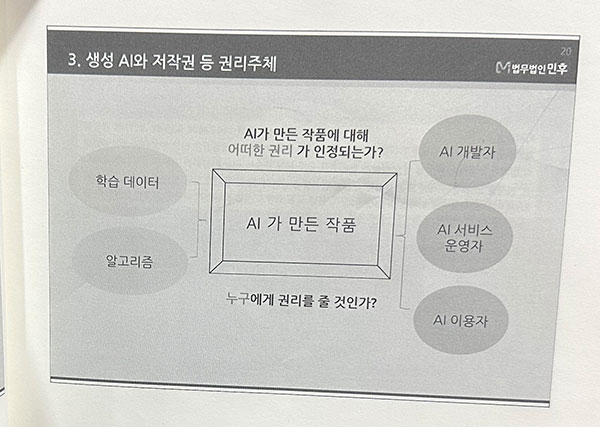

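In particular, continued discussion is needed on the responsible party, the standards, and the burden of proof when copyright is infringed in the course of using generative AI. This broadly comes down to two questions: to whom the copyright in a work created by AI can be attributed, and whether AI may learn from copyrighted works without the copyright holder's permission.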

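Typical cases involve copyright disputes among AI developers (those who design AI algorithms), AI service operators (those who commercialize AI software and provide it to users), and AI users.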

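For example, in 2022 Kakao Brain published a collection of poems written by its AI, SIA. Kakao Brain, which developed the AI, and the media art group Slitscope, which provided the training data, contracted to hold joint ownership. The work is recognized as an achievement under the Unfair Competition Prevention Act rather than under copyright. If an AI user adds creative elements of their own, however, copyright can be recognized for that user.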

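Overseas, complaints continue to be filed over AI being trained on data without the copyright holder's permission, and those disputes are currently being fiercely contested. There are no such cases in Korea yet, but there is a move to consider introducing a TDM (text and data mining) exemption into the Copyright Act. The TDM exemption was described as a scheme in which a watermark on a work indicates whether the holder is willing to provide it as AI training data, and the legislative discussion should be concluded promptly.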

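Yang said, "The biggest issue is whether training data (news articles, papers, conversations, web pages, and so on) can be used for training without permission. If copyright holders claim this is unjust, it is hard to justify applying fair use." Yang added, "When using images or text, one should be aware of this in advance and avoid infringement."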

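The seminar also introduced business models applying generative AI technology in five areas: AI training data, text, music, video, and images. CN.AI, Artificial Society, Pozalabs, Klleon, and Lionrocket took part in the presentations, offering examples of B2B monetization achieved by using ChatGPT and exposing the resulting capabilities as APIs.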
