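Artificial general intelligence (AGI) has recently drawn attention across industry as well as academia and is being discussed from many angles. An AI that can reason, learn, and solve problems on its own without human intervention can fairly be called the ultimate form of artificial intelligence.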

Five AI Experts Share Their Vision at Computer Vision Society Panel Discussion

“Vision AGI Can Be Verified on Sensorimotor Robotics Platforms”

Panel Points Toward Embodied Multitasking, Multi-Sensor, and Multimodal AI

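“To become artificial general intelligence (AGI), a system has to reach the stage where sensing and action are combined. It has to solve real problems and carry out real tasks through sensorimotor intelligence.”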

Kyoung Mu Lee, Professor, Department of Electrical and Computer Engineering, Seoul National University (Computer Vision Lab)

“AGI in computer vision is not easy to evaluate, and building a channel through which it can express itself is far harder than in the language domain.”

Hanbyul Joo, Professor, Department of Computer Science and Engineering, Seoul National University (Visual Computing Lab)

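▲The panel discussion on the role and future direction of computer vision on the path to AGI in the era of large models, under way at the Korean Computer Vision Society's KCCV 2023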

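At its recent KCCV 2023 event, the Korean Computer Vision Society held a panel discussion titled ‘The Role and Future Direction of Computer Vision on the Path to AGI in the Era of Large Models.’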

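The discussion was moderated by Jonghyun Choi, KCCV 2023 program chair and professor at Yonsei University. The panelists were Kyoung Mu Lee, professor at Seoul National University; Yoonjae Choi, professor at KAIST; Hanbyul Joo, professor at Seoul National University; Jong Wook Kim, member of technical staff at OpenAI; and Hwasup Lim, head of the AI research group at KIST. Together they held an in-depth conversation.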

■ Vision AGI Is Hard to Evaluate and Verify... “Sensorimotor Robotics Could Make It Possible”


▲Hanbyul Joo, Professor, Department of Computer Science and Engineering, Seoul National University

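In natural language processing (NLP), concrete applications such as ChatGPT have emerged, and some observers have responded positively, saying that they have ‘opened up the possibility of AGI’ or that we are ‘at the starting point of AGI.’ Strictly speaking, however, ChatGPT and other language models still fall under artificial narrow intelligence (ANI), and in computer vision it is even harder to define what AGI is.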

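Professor Hanbyul Joo said, “AGI is understood as a level of intelligence that can solve problems where the task is not defined, the domain is continuous, and the challenge cannot be framed as a single problem.” He explained that, viewed narrowly, NLP can also be seen as a sample of AGI.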

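Joo said that even if one imagines what AGI would look like in computer vision, it would not be easy to build, and he expects verification and evaluation in particular to be harder than for language models. “With AGI in vision, if you show it a video, for example, there is no good way to check whether it has understood it correctly, and no good means for it to express that understanding,” he pointed out.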

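For that reason, he said, a vision AGI needs to be fed information similar to the data that humans sense. It will come closer to AGI only when it learns from data that is connected to its environment, rather than from the scattered data used for training today.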

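Vision researchers are pursuing many approaches from this perspective. AI Habitat, the simulator platform cited as an example, is a tool for embodied AI research. Working in a 3D simulator, it supports the paradigm shift toward embodied AI, including active perception, interaction-based learning, and environment-grounded dialogue.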

Joo concluded, “Vision has to go hand in hand with robotics,” adding, “If we build an AGI, I think the platform that can verify it will be robotics with sensorimotor skills similar to those of humans.”

■ Sensorimotor AI Offers Many Opportunities for Computer Vision


▲Kyoung Mu Lee, Professor, Department of Electrical and Computer Engineering, Seoul National University

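Professor Kyoung Mu Lee, a leading Korean expert in computer vision and a world-renowned scholar, expressed the view that the future of AGI lies in reaching the stage where ‘sensing’ and ‘action’ are combined.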

In doing so, he brought up the concept of sensorimotor intelligence, the ability to tie sensing to physical action.

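“It has to be able to perform the services and functions we want through action in real life,” Lee said. “Ultimately, the move toward embodied multitasking, multi-sensor, and multimodal systems is inevitable, and vision will play a truly central role in that process.”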

Many scholars and research groups working on AI are already studying physics-related AI and sensorimotor AI.

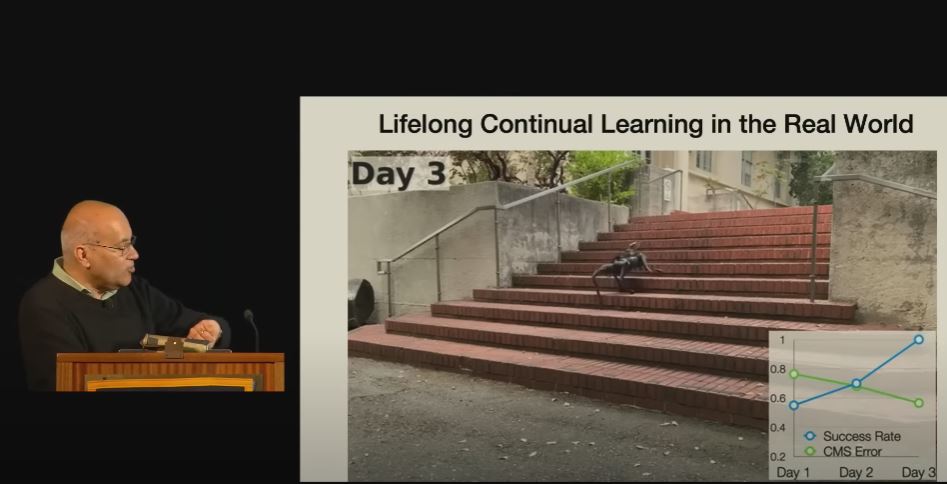

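In March, Professor Jitendra Malik, who researches computer vision at the University of California, Berkeley, gave a lecture on sensorimotor learning and artificial intelligence. He described a robot that, in order to navigate obstacles and terrain, uses its own proprioception to compute quantities such as the depth at its legs, predicts the depth 1.5 seconds ahead, and obtains that prediction from images in its vision system.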

▲Professor Jitendra Malik delivering his lecture (captured from the UC Berkeley Events YouTube channel)

“On the first day it moves clumsily, on the second day it climbs more stairs than before, and in the end you see the robot climb all the way to the top,” Malik said.

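With research that applies sensorimotor-based AI to robotics now well under way, Professor Kyoung Mu Lee said, “I think there are more than enough roles and opportunities for vision researchers when it comes to the highly complex intelligence that goes beyond the fragmented vision problems of the past and solves problems in real life.”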

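Dr. Jong Wook Kim, member of technical staff at OpenAI, who also took part as a panelist, predicted, “Ultimately, if self-supervised learning is brought into computer vision as well, we will be able to extract far more from visual data that resembles what young children see as they come to view the world.”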
