Perception sensors: a core technology for advancing and popularizing autonomous driving

Thermal cameras, 4D imaging radar, and FMCW lidar draw attention

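With most autonomous-vehicle accidents traced to the performance limits and errors of perception sensors, experts argue that it is critical to overcome these limits by upgrading sensors to cope with recognition errors and adverse weather and by applying sensor-fusion techniques.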

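The Korea Automotive Technology Institute (KATECH) held its 5th Jasaneobo event on the 18th at the COEX Startup Branch, where it shared the current state and outlook of perception sensors, often called "the eyes of autonomous driving."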

▲KATECH President Na Seung-sik delivers welcoming remarks at the 5th Jasaneobo event (Photo: KATECH)

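KATECH President Na Seung-sik stressed, "Perception sensors are a core technology for advancing and popularizing autonomous driving. To ensure driving safety and reliability in particular, it is critical to improve the performance of cameras, lidar, and radar and to secure these technologies."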

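In 2015, the Tesla Model X, an early mass-production vehicle with driver-assistance features, carried about 20 sensors, whereas Waymo's fifth-generation vehicle in 2021 carried about 40 (29 cameras, 5 lidars, and 5 radars).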

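Yet despite the growing number of sensors, autonomous-vehicle accidents, including fatal ones, continue to occur. Cameras, radar, and lidar have run up against their limits, and the need to improve them is keenly felt.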

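Radar and lidar are somewhat weaker at recognizing objects that move laterally across the vehicle's path, as the March 2018 crash showed, in which an Uber self-driving vehicle struck and killed a pedestrian walking a bicycle across the road outside a crosswalk.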
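
One physical factor that makes crossing targets harder for radar in particular can be illustrated simply. The article does not state the cause, so treat this as an illustration rather than an account of the Uber crash: Doppler processing measures only the component of a target's velocity along the sensor's line of sight, so a target crossing directly in front of the sensor produces almost no Doppler shift. The coordinate convention and the numbers below are hypothetical.

```python
import math


def radial_velocity(px: float, py: float, vx: float, vy: float) -> float:
    """Component of a target's velocity along the sensor's line of sight.

    (px, py): target position relative to the sensor in metres
              (x forward, y to the left; a hypothetical convention).
    (vx, vy): target velocity in m/s in the same frame.
    Positive result: target moving away; negative: approaching.
    """
    r = math.hypot(px, py)
    if r == 0.0:
        raise ValueError("target position must not coincide with the sensor")
    # Project the velocity vector onto the unit line-of-sight vector.
    return (px * vx + py * vy) / r


# Pedestrian 30 m straight ahead, walking across the lane at 1.5 m/s.
print(radial_velocity(30.0, 0.0, 0.0, 1.5))   # 0.0 -> no Doppler shift
# Same pedestrian walking toward the vehicle at 1.5 m/s.
print(radial_velocity(30.0, 0.0, -1.5, 0.0))  # -1.5
```

The crossing pedestrian is still visible as a reflection, but without a Doppler signature it is harder to separate from static clutter, so its motion has to be inferred by tracking position over several frames.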

Radar units mounted below the front grille also suffer degraded signal reception when heavy ice builds up on their surface during winter driving.

Camera performance drops when sufficient ambient light is not available and in conditions such as heavy rain, heavy snow, or dense fog.

To overcome these limits, complementary sensors and sensor-fusion technology are gaining importance.

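Noh Hyung-ju, head of the Semiconductor & Sensor Technology Division at KATECH's Autonomous Driving Technology Research Institute, said, "Beyond sensors that recognize visible objects such as pedestrians and other vehicles, development is now active on sensors that can detect hard-to-see hazards such as black ice. Thermal cameras, 4D imaging radar, and FMCW lidar are being developed, and technology that fuses these sensors is also an important field."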
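
Sensor fusion covers many methods, and the article does not say which ones these developers use. As a minimal sketch of the core idea, assuming independent, unbiased Gaussian errors, two range readings of the same object can be combined by inverse-variance weighting, so the more precise sensor dominates and the fused estimate is tighter than either input. The sensor names and noise figures below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeMeasurement:
    sensor: str        # label only, e.g. "radar" or "lidar"
    range_m: float     # measured distance to the object, metres
    sigma_m: float     # 1-sigma measurement uncertainty, metres


def fuse_ranges(measurements: list[RangeMeasurement]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent range estimates.

    Returns (fused_range_m, fused_sigma_m). Assumes the errors are
    independent, unbiased and Gaussian.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / (m.sigma_m ** 2) for m in measurements]
    total = sum(weights)
    fused = sum(w * m.range_m for w, m in zip(weights, measurements)) / total
    fused_sigma = (1.0 / total) ** 0.5   # always <= the smallest input sigma
    return fused, fused_sigma


readings = [
    RangeMeasurement("radar", 50.4, 0.5),   # hypothetical noise levels
    RangeMeasurement("lidar", 50.1, 0.1),
]
print(fuse_ranges(readings))
```

If one sensor degrades (for example a camera at night or an iced-over radar), raising its sigma automatically shrinks its weight; real fusion stacks add time filtering and data association on top of this step.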

Hanwha Systems proposed thermal (infrared) cameras as a solution for overcoming these weaknesses of perception sensors and securing safety, the top priority in autonomous driving.

▲Performance of Hanwha Systems' Quantum Red thermal camera (Image source: Quantum Red website)

According to market research firm Yole, the automotive thermal camera market is forecast to grow from $70 million in 2021 to $160 million in 2026.

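Thermal cameras are passive sensors: they need no external light source and instead detect the thermal energy that every object above absolute zero (0 kelvin) radiates, which lets them operate in darkness and remain largely effective in adverse weather.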
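
Why a body-temperature object stands out to such a sensor can be sketched with Wien's displacement law: an object at roughly 310 K radiates most strongly near 9 to 10 µm, inside the long-wave infrared band that automotive thermal cameras typically use. The sketch below treats objects as ideal blackbodies (emissivity 1), which is a simplifying assumption; real surfaces emit somewhat less.

```python
WIEN_B = 2.897771955e-3   # Wien's displacement constant, m*K
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W/(m^2*K^4)


def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength of peak blackbody emission in micrometres (Wien's law)."""
    if temp_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_B / temp_k * 1e6


def radiant_exitance(temp_k: float, emissivity: float = 1.0) -> float:
    """Total power emitted per unit area in W/m^2 (Stefan-Boltzmann law)."""
    return emissivity * SIGMA * temp_k ** 4


for name, t in [("pedestrian skin, ~310 K", 310.0), ("road at 0 C", 273.15)]:
    print(f"{name}: peak {peak_wavelength_um(t):.1f} um, "
          f"{radiant_exitance(t):.0f} W/m^2 (blackbody)")
```

Because emitted power scales with the fourth power of temperature, a pedestrian radiates noticeably more than a cold road surface, which is the contrast a thermal camera picks up regardless of visible lighting.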

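Choi Yong-jun, a general manager at Hanwha Systems, said, "Thermal sensors excel at distinguishing living beings from inanimate objects. In situations such as entering or exiting a tunnel, they achieve better recognition rates than daytime cameras, and they can accurately detect pedestrians during night driving."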

4D imaging radar is drawing attention in the autonomous-driving market and is rated as offering more than ten times the scalability of conventional 3D radar.

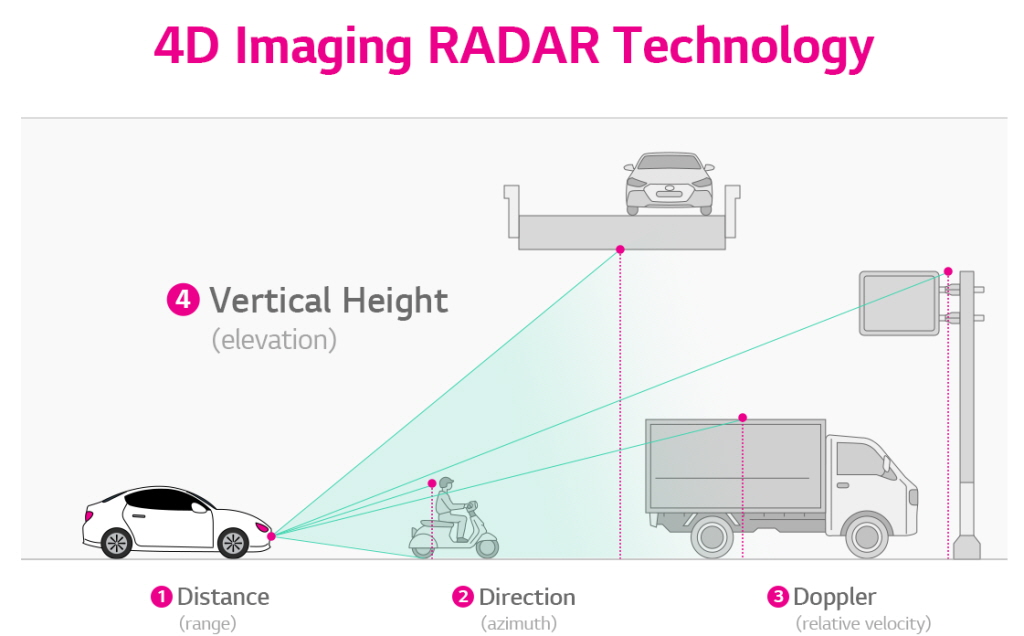

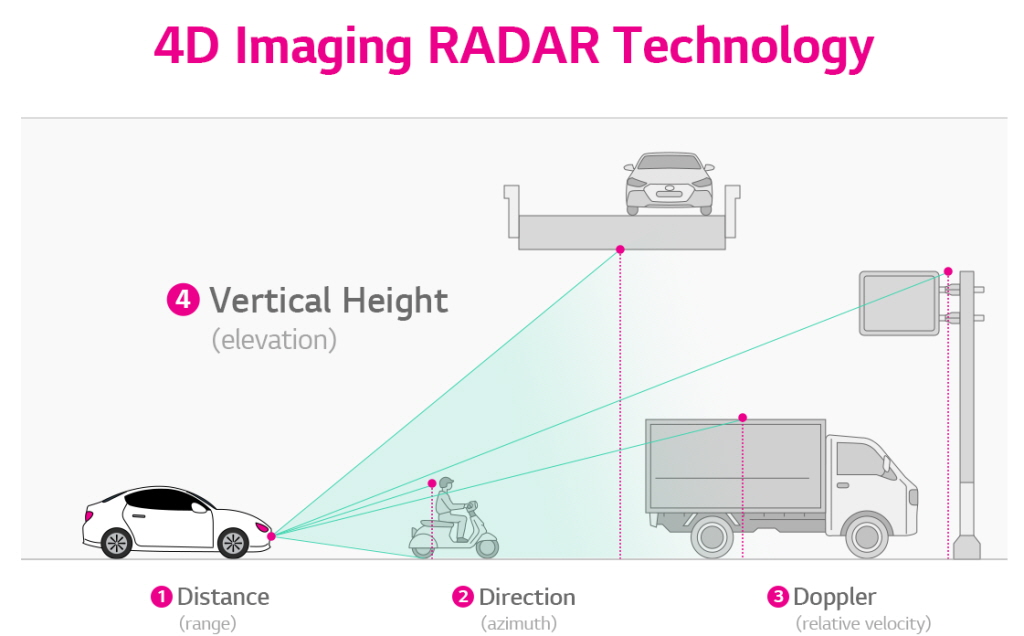

기존 3D 레이더가 거리, 방향(방위각), 상대 속도를 식별했다면, 4D 이미징 레이더 기술은 높이(수직각)라는 또 한 가지 차원의 정보를 추가한다.

▲4D 이미징 레이더 기술 개요(그림 출처: LG이노텍)

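As a result, 4D imaging radar can determine an object's height, or how far above the road surface it sits, and can therefore provide richer and more accurate data about the driving environment than before.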
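
A minimal sketch of why the elevation angle matters, assuming a simple flat-road geometry: converting a 4D detection (range, azimuth, elevation, velocity) into vehicle coordinates yields a height above the road, which lets software separate an overpass from a stopped car at the same distance. A 3D radar without elevation would see both as a stationary reflection 80 m ahead. The mounting height, the 4.5 m clearance threshold, and the function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    range_m: float              # distance to the reflection point
    azimuth_deg: float          # horizontal angle, positive to the left
    elevation_deg: float        # vertical angle, positive upward (the 4th dimension)
    radial_velocity_mps: float  # Doppler velocity; not used in this height check


def to_vehicle_frame(d: RadarDetection,
                     mount_height_m: float) -> tuple[float, float, float]:
    """Convert a spherical detection to (x forward, y left, z up) in metres,
    with z measured from a flat road surface."""
    az = math.radians(d.azimuth_deg)
    el = math.radians(d.elevation_deg)
    horizontal = d.range_m * math.cos(el)
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = mount_height_m + d.range_m * math.sin(el)
    return x, y, z


def is_overhead(d: RadarDetection, mount_height_m: float,
                clearance_m: float = 4.5) -> bool:
    """True if the reflection lies above a hypothetical vehicle-clearance
    height (e.g. an overpass) rather than in the driving corridor."""
    _, _, z = to_vehicle_frame(d, mount_height_m)
    return z > clearance_m


overpass = RadarDetection(80.0, 0.0, 3.5, 0.0)
stopped_car = RadarDetection(80.0, 0.0, 0.3, 0.0)
print(is_overhead(overpass, mount_height_m=0.5))     # True  (z is about 5.4 m)
print(is_overhead(stopped_car, mount_height_m=0.5))  # False (z is about 0.9 m)
```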

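Bitsensing, which exhibited 4D imaging radar at CES 2022, explained the advantages of imaging radar: "Conventional automotive radar, which sensed only speed and horizontal information, goes a step further with 4D imaging radar, which detects height and adds velocity data to provide a three-dimensional picture of the surroundings. It can deliver detailed, precise information on the motion of moving objects, including range, velocity, and angle."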

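Kim Yong-jae, vice president of Smart Radar System, said at KATECH's Jasaneobo event on the 18th, "A limitation of current radar is that it struggles to tell whether a target is above or below, which makes it hard to identify overpasses and tunnel entrances. 4D imaging radar can overcome this, and it is a solution that can be put to good use even in Level 3 autonomous driving."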

FMCW (Frequency Modulated Continuous Wave) lidar is also emerging as a key component for autonomous driving.

A leading Korean company in this area is Infoworks, which in 2019 became the first in Korea to develop an FMCW lidar and has since showcased its technology to the world at CES.

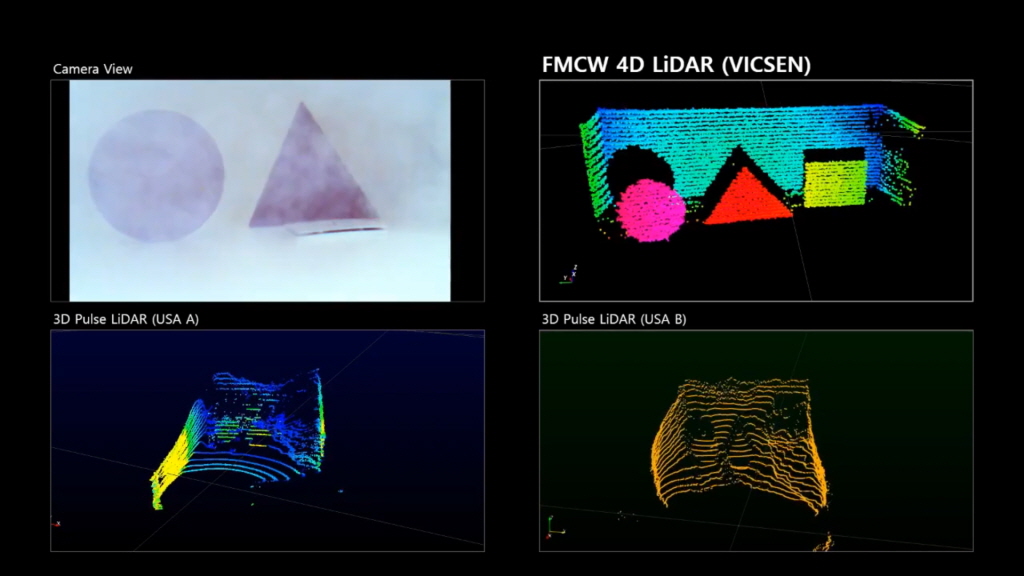

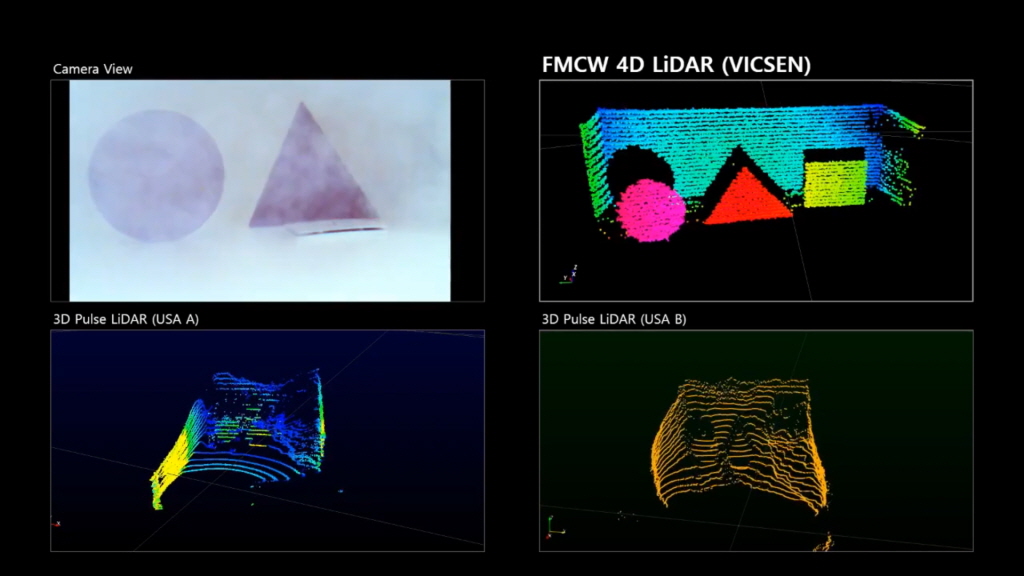

▲FMCW lidar image clarity comparison (Image source: Infoworks website)

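Conventional lidar uses the ToF (Time of Flight) method: it fires pulsed laser beams and measures how long the light reflected from an object takes to return, thereby determining the object's direction and distance.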
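
The ToF relation itself is a one-line formula: light covers the path out and back, so the distance is half the round-trip time multiplied by the speed of light. A minimal sketch (function names are illustrative):

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact by definition


def tof_distance_m(round_trip_s: float) -> float:
    """One-way distance from a pulsed ToF echo (half the round-trip path)."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse: how long the echo from an object at distance_m takes."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


print(tof_distance_m(1e-6))            # about 149.9 m for a 1 microsecond echo
print(round_trip_time_s(200.0) * 1e9)  # about 1334 ns for a target at 200 m
```

The numbers show why ToF lidar needs fast timing electronics: one nanosecond of timing error corresponds to roughly 15 cm of range error. A single pulse gives position only; speed must be estimated by comparing successive frames.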

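FMCW lidar, by contrast, measures the frequency shift of the beam reflected from an object, which lets it determine the object's velocity as well as its distance.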
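
A simplified sketch of how one frequency measurement yields both range and speed, assuming a triangular chirp (a common FMCW scheme; the article does not say which waveform Infoworks uses). The round-trip delay adds the same beat-frequency offset on the up- and down-ramps, while the Doppler shift from target motion enters with opposite signs, so the sum isolates range and the difference isolates speed. All chirp parameters are hypothetical.

```python
from dataclasses import dataclass

C = 299_792_458.0  # speed of light in vacuum, m/s


@dataclass
class ChirpConfig:
    """Hypothetical triangular-chirp parameters (not from any real product)."""
    wavelength_m: float = 1550e-9   # laser wavelength
    bandwidth_hz: float = 1e9       # optical frequency sweep per ramp
    chirp_time_s: float = 10e-6     # duration of one up- or down-ramp

    @property
    def slope_hz_per_s(self) -> float:
        return self.bandwidth_hz / self.chirp_time_s


def beat_frequencies(cfg: ChirpConfig, range_m: float,
                     closing_speed_mps: float) -> tuple[float, float]:
    """Simulate up- and down-ramp beat frequencies for a single target.

    closing_speed_mps > 0 means the target is approaching. Assumes the
    Doppler shift is smaller than the range term so no beat changes sign.
    """
    f_range = 2.0 * range_m * cfg.slope_hz_per_s / C        # from delay
    f_doppler = 2.0 * closing_speed_mps / cfg.wavelength_m  # from motion
    return f_range - f_doppler, f_range + f_doppler


def range_and_speed(cfg: ChirpConfig, f_up: float,
                    f_down: float) -> tuple[float, float]:
    """Recover range and closing speed from the two beat frequencies."""
    f_range = (f_up + f_down) / 2.0     # Doppler terms cancel in the sum
    f_doppler = (f_down - f_up) / 2.0   # range terms cancel in the difference
    range_m = f_range * C / (2.0 * cfg.slope_hz_per_s)
    closing_speed_mps = f_doppler * cfg.wavelength_m / 2.0
    return range_m, closing_speed_mps


cfg = ChirpConfig()
f_up, f_down = beat_frequencies(cfg, range_m=100.0, closing_speed_mps=20.0)
print(range_and_speed(cfg, f_up, f_down))  # approximately (100.0, 20.0)
```

Unlike the ToF case, the speed here comes from a single measurement rather than from comparing frames, which is the advantage the article attributes to FMCW lidar.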

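It is designed to overcome interference from sunlight, headlights, and other lasers, and it includes object recognition and tracking functions. By raising recognition rates in adverse weather such as heavy rain and in low light, it takes conventional lidar a step further toward safe autonomous driving.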

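Meanwhile, according to KATECH, the autonomous-vehicle market is expected to reach $154.9 billion (about KRW 209 trillion) in 2025 and $1 trillion (about KRW 1,347 trillion) in 2035, growing at an average annual rate of more than 40%.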

As the autonomous-driving market expands, the markets for related sensors are also expected to grow sharply.

MarketsandMarkets projects that the autonomous-driving camera market will grow from $8.0 billion in 2023 to $13.9 billion in 2028, a compound annual growth rate (CAGR) of 11.7%.
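
The growth rates in these forecasts are compound annual growth rates, computed as (end / start)^(1 / years) - 1. A minimal sketch that reproduces the camera-market figure from its start and end values:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and period must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Camera-market forecast quoted above, in billions of US dollars.
rate = cagr(8.0, 13.9, 2028 - 2023)
print(f"{rate:.1%}")  # 11.7%
```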

Research and Markets, in its study of the autonomous-driving radar market, forecasts growth from $5.66 billion in 2022 to $12.12 billion in 2028, a CAGR of 14.72%.

According to KOTRA Overseas Market News, the global automotive laser lidar market is expected to grow at a CAGR of 76.6%, from $360 million in 2022 to $6 billion in 2025 and $11.01 billion in 2027.
