We sat down with Bernard Berg of Infineon Technologies to hear about semiconductor solutions that support LLM-based AI, which consumes enormous computing power.

“AI needs solutions capable of supporting enormous computing power”

LLMs run on giant clusters of 1,000–4,000 GPUs

Infineon supports them with power delivery, data transmission, and more

Artificial intelligence (AI) has become a hot topic and a source of much debate, and ChatGPT, an AI application, has drawn enormous attention and praise.

The GPT (generative pre-trained transformer) behind ChatGPT is a type of large language model (LLM), and it requires an enormous amount of computing power.

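As a leading supplier of computing and other semiconductor technologies related to AI, Infineon offers a perspective that should help the many designers who want to become familiar with AI and put it to use.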

This article provides background and insight on AI, covering why it has emerged now, its risks, and its current and future outlook.

■ Background on ChatGPT and LLMs

Machine learning (ML) first found success in applications for specific domains.

In 1997, the Deep Blue supercomputer defeated Kasparov, then the world chess champion.

Around 2010, IBM Watson, a question-answering computer system, won the quiz show ‘Jeopardy!’.

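Natural language processing (NLP) models began to gain popularity with the arrival of Siri, followed by Alexa. In 2015, deep learning models showed that they could outperform humans at image classification tasks such as diagnosing skin cancer and reading MRI (magnetic resonance imaging) scans.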

More recently, these models have been applied to the self-driving software of autonomous vehicles.

Having built all these special-purpose products, researchers turned to consolidation, aiming to develop a single model that could solve every problem for everyone.

To that end, they developed systems that learn from all the information on the web, including Wikipedia and books.

These are called large language models (LLMs), and the best known is OpenAI's ChatGPT (3.0 and 3.5).

According to experts, LLMs will surpass human intelligence before long.

More than 30 LLMs are currently available; prominent examples include Google's PaLM, Meta AI's LLaMA, and OpenAI's GPT-4.

You can ask ChatGPT almost any question, for example, to help plan a trip to Paris.

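More specifically, you could ask, “Recommend five sights in Paris.” The request is easy to change to ten sights: just say, “Recommend ten.” ChatGPT then adds to its earlier answer rather than repeating it, just as people do in everyday conversation. Because ChatGPT summarizes the results at a glance, you can use them as a reference when planning your trip.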

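The first answer suggested a five-day trip; if the user adds dates, ChatGPT proposes an itinerary to match. First-time users are often surprised at how easy it is to interact with ChatGPT and how good its answers are, because it feels like talking with a friend or colleague.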

■ Timing and rapid growth

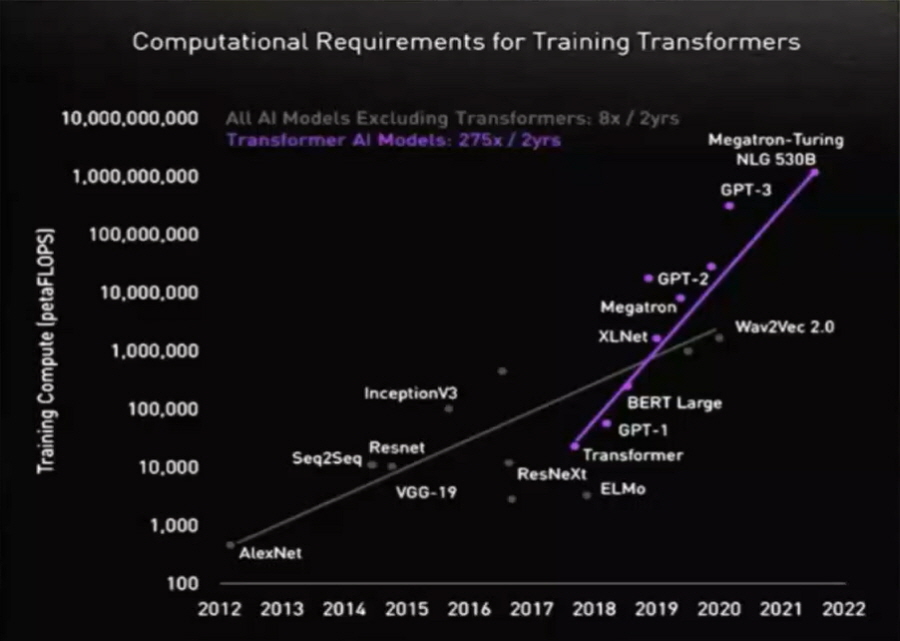

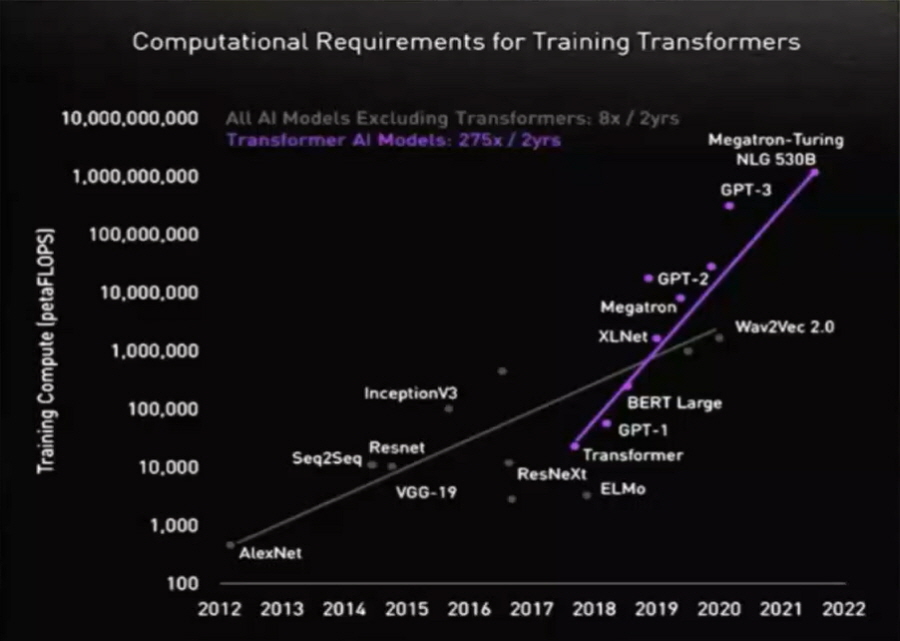

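The timing of LLMs rests on the wide availability of computing power. As Figure 1 shows, the compute used to train transformers, measured in FLOPS (floating-point operations per second), has increased 10⁵-fold since 2017.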

Model sizes have grown 1,600-fold in just the past few years.

Getting an answer, or inference, from GPT-3 takes 32 hours on a desktop CPU (central processing unit) but only one second on an Nvidia A100-class GPU (graphics processing unit).

Training GPT-3 on a single such GPU, meanwhile, would take 30 years.

Training is therefore usually done on giant clusters of 1,000 to 4,000 GPUs. It takes from two weeks down to as little as four days, and a single training run costs between $500,000 and $4.6 million.
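The figures above can be sanity-checked with back-of-the-envelope arithmetic. This Python sketch is illustrative only: it assumes, purely for simplicity, that training time divides perfectly across GPUs, and the function names are hypothetical.

```python
# Back-of-the-envelope arithmetic using the figures quoted in the article.
# Assumption (not from the article): training time scales perfectly linearly
# with GPU count, i.e. no communication or synchronization overhead.

SECONDS_PER_HOUR = 3600
DAYS_PER_YEAR = 365


def inference_speedup(cpu_hours: float, gpu_seconds: float) -> float:
    """Ratio of CPU time to GPU time for one GPT-3 inference."""
    return cpu_hours * SECONDS_PER_HOUR / gpu_seconds


def ideal_cluster_days(single_gpu_years: float, num_gpus: int) -> float:
    """Training time in days if the work split evenly across num_gpus."""
    return single_gpu_years * DAYS_PER_YEAR / num_gpus


if __name__ == "__main__":
    print(f"CPU vs. GPU inference: {inference_speedup(32, 1):,.0f}x")
    for n in (1_000, 4_000):
        print(f"{n:>5} GPUs: {ideal_cluster_days(30, n):.1f} days (ideal scaling)")
```

The 32-hour versus one-second comparison is a ratio of 32 × 3,600 = 115,200. Under ideal scaling, 30 GPU-years comes to roughly 11 days on 1,000 GPUs and under 3 days on 4,000. Real clusters lose time to communication and synchronization, which is one plausible reason the quoted range (two weeks down to four days) is somewhat longer.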

Only a very limited number of organizations have had access to such massive computing resources, so the democratization of this capability is quite recent.

▲Figure 1: Trend in compute requirements for training transformers. (Source: Nvidia)

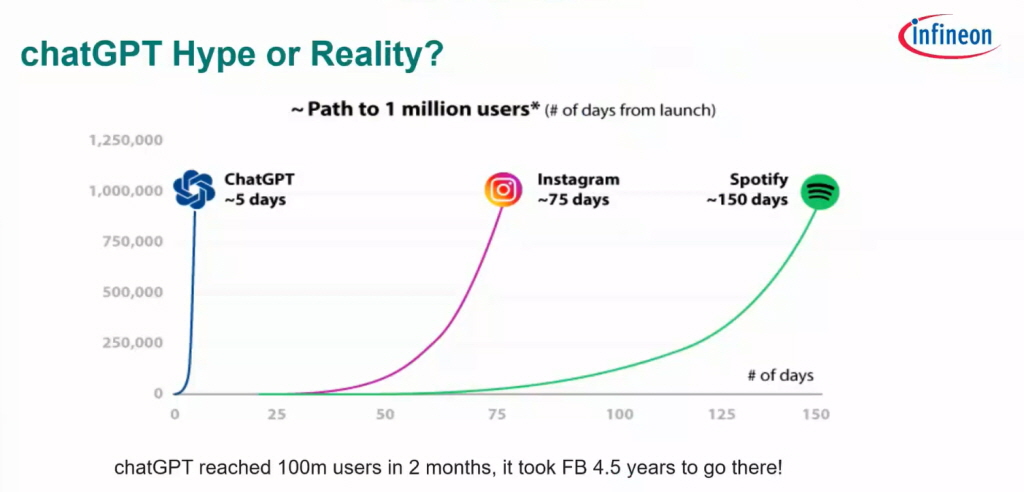

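The importance of LLMs is evident from how quickly ChatGPT has been adopted. As Figure 2 shows, Instagram and Spotify took 75 and 150 days, respectively, to reach 1 million users, while ChatGPT reached that mark in just 5 days. Facebook took 4.5 years to reach 100 million users; ChatGPT took only two months. This rate of growth is unprecedented in the technology industry.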

▲Figure 2: No other internet-based tool comes close to the speed at which ChatGPT has been adopted. (Source: Google)

■ How ChatGPT works

ChatGPT can be viewed as a probabilistic sentence generator, that is, a generator structured to guess the most likely sentence.

Based on what has been said so far, it guesses (answers with) the most probable next word, and the context can shift depending on how much information has been provided.
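The idea of guessing the most probable next word from the preceding words can be illustrated with a deliberately tiny sketch. This is a word-count (n-gram) model, not a transformer like GPT, and `build_model`, `predict_next`, and the sample corpus are hypothetical names chosen for illustration. The `context_size` parameter loosely mirrors how many preceding words the model "sees".

```python
from collections import Counter, defaultdict

# Toy next-word guesser: a word-count (n-gram) model, NOT a transformer.
# `context_size` loosely mirrors how many preceding words the model "sees".


def build_model(corpus: str, context_size: int) -> dict:
    """Count which word follows each run of `context_size` words in the corpus."""
    words = corpus.split()
    model: dict = defaultdict(Counter)
    for i in range(len(words) - context_size):
        context = tuple(words[i:i + context_size])
        model[context][words[i + context_size]] += 1
    return model


def predict_next(model: dict, prompt: str, context_size: int):
    """Return (most likely next word, its estimated probability), or (None, 0.0)."""
    # Only the last `context_size` words of the prompt influence the guess.
    context = tuple(prompt.split()[-context_size:])
    counts = model.get(context)
    if not counts:
        return None, 0.0
    word, count = counts.most_common(1)[0]
    return word, count / sum(counts.values())


corpus = "the cat sat on the mat the cat sat on the sofa the cat ate the fish"
model = build_model(corpus, context_size=2)
print(predict_next(model, "yesterday the cat", context_size=2))
```

Here the guess after "the cat" is "sat" with an estimated probability of 2/3, because in the corpus "the cat" is followed by "sat" twice and by "ate" once. The word "yesterday" falls outside the two-word window and has no influence, which is the same limitation a fixed context window imposes on a real LLM, only at a vastly smaller scale.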

GPT-3, for example, examines the previous 2,000 words (about four pages) to decide on the next word.

By comparison, GPT-3.5 examines about eight pages and GPT-4 about 64 pages, which greatly improves their ability to guess the next word. The LLM process can be briefly summarized as follows.

▶Step 1: Train a foundation model that contains detailed information on a wide range of topics.

▶Step 2: The LLM's owner or another party supplies a pre-prompt. This defines the chatbot's ‘personality’ (friendly, helpful…) and the harmful domains it should avoid.

▶Step 3: Fine-tuning adds further input or values from a particular perspective.

▶Step 4: Reinforcement learning from human feedback (RLHF) filters out some answers.
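The pre-prompt in Step 2, together with the conversational follow-ups described earlier (asking for ten sights instead of five), can be illustrated by how a chat application might assemble what the model sees on each turn. This is a hypothetical sketch: the `Message` type, `build_context`, and the word-based budget are assumptions for illustration, real systems count tokens rather than words, and the article does not describe ChatGPT's internals.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system" (the pre-prompt), "user", or "assistant"
    text: str


def word_count(msg: Message) -> int:
    return len(msg.text.split())


def build_context(pre_prompt: str, history: list[Message], question: str,
                  budget_words: int) -> list[Message]:
    """Hypothetical helper: pre-prompt first, then as much recent history as fits."""
    system = Message("system", pre_prompt)
    latest = Message("user", question)
    remaining = budget_words - word_count(system) - word_count(latest)
    kept: list[Message] = []
    for msg in reversed(history):  # walk from the newest turn backwards
        cost = word_count(msg)
        if cost > remaining:
            break  # older turns no longer fit in the window
        kept.append(msg)
        remaining -= cost
    return [system, *reversed(kept), latest]


history = [
    Message("user", "Recommend five sights in Paris"),
    Message("assistant", "Eiffel Tower Louvre Notre-Dame Montmartre Orsay"),
]
for m in build_context("You are a friendly helpful travel assistant",
                       history, "Make it ten", budget_words=100):
    print(m.role, ":", m.text)
```

With a generous budget, the earlier exchange about five sights is passed along with "Make it ten", which is what lets a follow-up build on the previous answer instead of starting over. Shrink `budget_words` and the oldest turns are dropped first, while the pre-prompt that defines the chatbot's personality is always kept.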

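To provide an answer, a tool such as ChatGPT captures what the user has entered and, in many cases, calls an application programming interface (API) to respond quickly. The API typically accesses information on other servers, usually under a licensing fee, and returns an answer. This process may be repeated several times.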

LLM tools do not deserve users' unconditional trust, because using them carries real risks.

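These tools can give well-packaged answers to many questions, but those answers can be wrong, sometimes badly so. Users have no way of knowing which filters were applied or whether an answer has been skewed or even made misleading.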

■ Systems expertise for AI

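As a leading provider of semiconductor system solutions, Infineon delivers solutions across a wide range of areas, including safer and more efficient cars, more efficient and greener energy conversion, connected and secure IoT systems, power management, sensing, and data transmission.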

For example, Infineon power systems form part of DC-DC solutions that can deliver 1,000 amperes to a GPU.
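To give a sense of scale, the relation $P = V \cdot I$ can be applied to the 1,000 A figure. The 0.8 V core voltage, 12 V input rail, and 90% conversion efficiency ($\eta$) below are illustrative assumptions, not Infineon specifications.

```latex
\begin{aligned}
P_{\text{out}}  &= V_{\text{core}}\, I_{\text{core}} = 0.8\,\text{V} \times 1000\,\text{A} = 800\,\text{W} \\
I_{\text{in}}   &= \frac{P_{\text{out}}}{\eta\, V_{\text{in}}} = \frac{800\,\text{W}}{0.9 \times 12\,\text{V}} \approx 74\,\text{A} \\
P_{\text{loss}} &= P_{\text{out}} \left( \frac{1}{\eta} - 1 \right) \approx 89\,\text{W}
\end{aligned}
```

Under these assumptions, it is the low core voltage that drives the current so high, and every point of conversion efficiency shows up directly as heat that the system must remove.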

Infineon also offers a variety of system-on-chip (SoC) solutions for memory, advanced automotive, and other applications.

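With its recent acquisition of Imagimob, a leading platform provider of machine learning solutions for edge devices, Infineon has strengthened its position in the fast-growing TinyML (Tiny Machine Learning) and AutoML (Automated Machine Learning) markets and now offers a comprehensive development platform for machine learning on edge devices.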

Customers can now draw on this experience in a variety of applications, including LLMs.

■ AI outlook

Although it may remain somewhat controversial, AI is already established, and its use looks set to keep growing.

As designers seek to become familiar with AI and put it to use, they need a reliable system solutions company such as Infineon.

As a market leader in a range of fields including AI/ML, Infineon helps customers bring products to market quickly through its broad portfolio of advanced sensor and IoT solutions.

※ Author

Bernard Berg, Director of AI and Data Science, Infineon Technologies