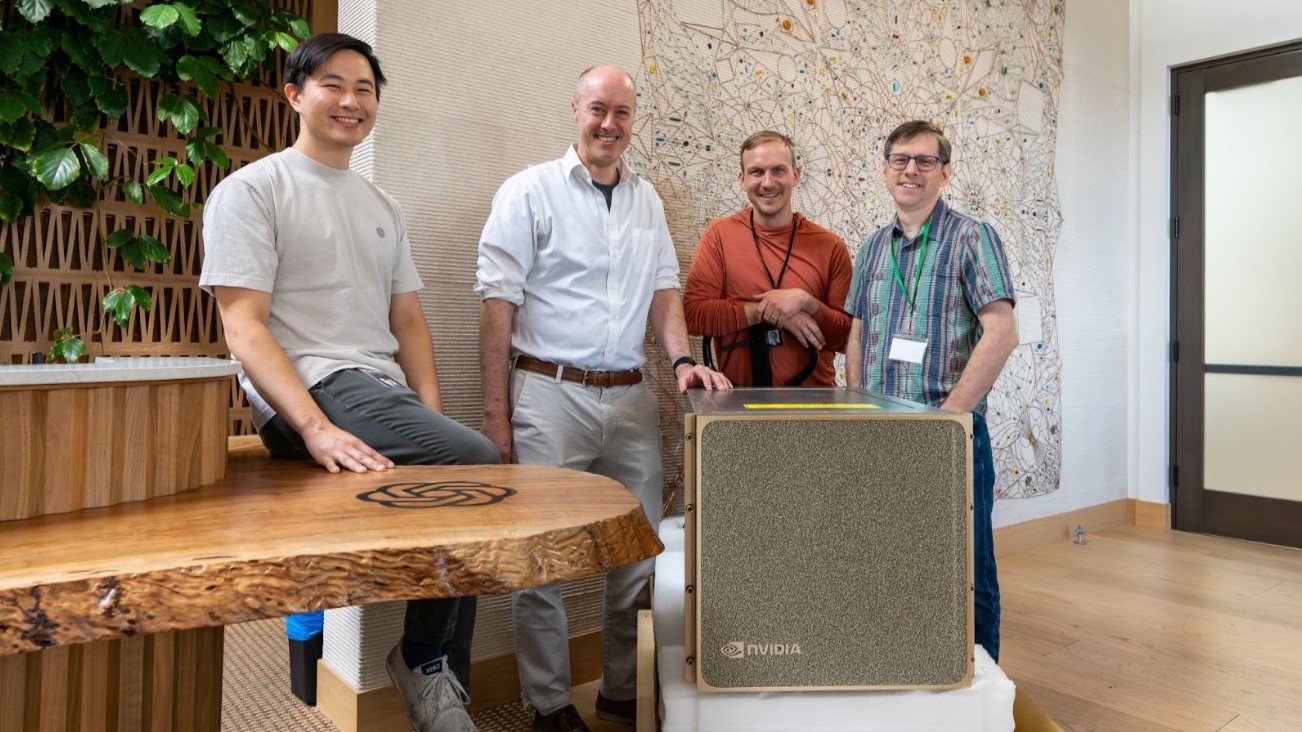

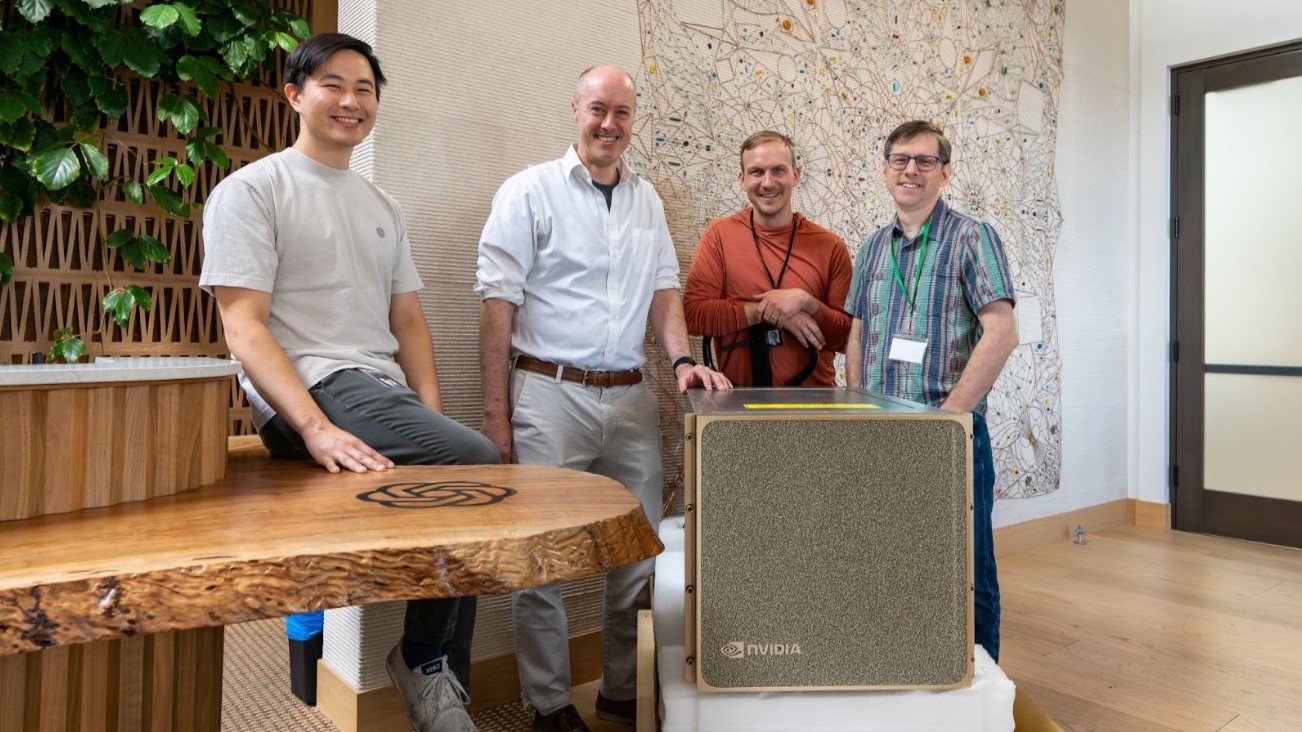

▲ The DGX B200 that NVIDIA delivered to OpenAI / (Photo: NVIDIA)

Microsoft CEO Nadella: "Supporting the most sophisticated AI workloads"

OpenAI receives one of the first Blackwell DGX B200 samples

NVIDIA announced on the 11th that it is supplying its first Blackwell systems to Microsoft (MS) and OpenAI.

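NVIDIA said Microsoft Azure has become the first cloud service provider to run an NVIDIA Blackwell system with GB200-based AI servers. Microsoft Azure said it is optimizing the platform to run AI models, using InfiniBand networking and innovative closed-loop liquid cooling.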

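Responding to the news, Microsoft CEO Satya Nadella said on his official X account, "We are leading the industry through our long-standing partnership with NVIDIA and deep innovation, supporting the most sophisticated AI workloads."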

The GB200 Grace Blackwell Superchip is the core component of the NVIDIA GB200 NVL72, a multi-node, liquid-cooled, rack-scale solution that connects 72 Blackwell GPUs and 36 Grace CPUs.

According to NVIDIA, the system delivers up to 30 times faster inference on large language model (LLM) workloads and can run models with trillions of parameters in real time.

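NVIDIA also delivered one of the first engineering samples of the Blackwell DGX B200 to OpenAI. OpenAI plans to use NVIDIA Blackwell B200 data center GPUs for AI training on the new DGX B200 platform. NVIDIA posted a photo of the DGX B200 delivered to OpenAI on its official X account.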

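The NVIDIA DGX B200 is a unified AI platform for model training, fine-tuning, and inference. It carries eight Blackwell GPUs interconnected with fifth-generation NVIDIA NVLink and delivers three times the training performance and 15 times the inference performance of the previous-generation DGX H100.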

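With that performance, the system is used for a range of workloads including LLMs, recommender systems, and chatbots, helping enterprises accelerate their AI innovation.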