

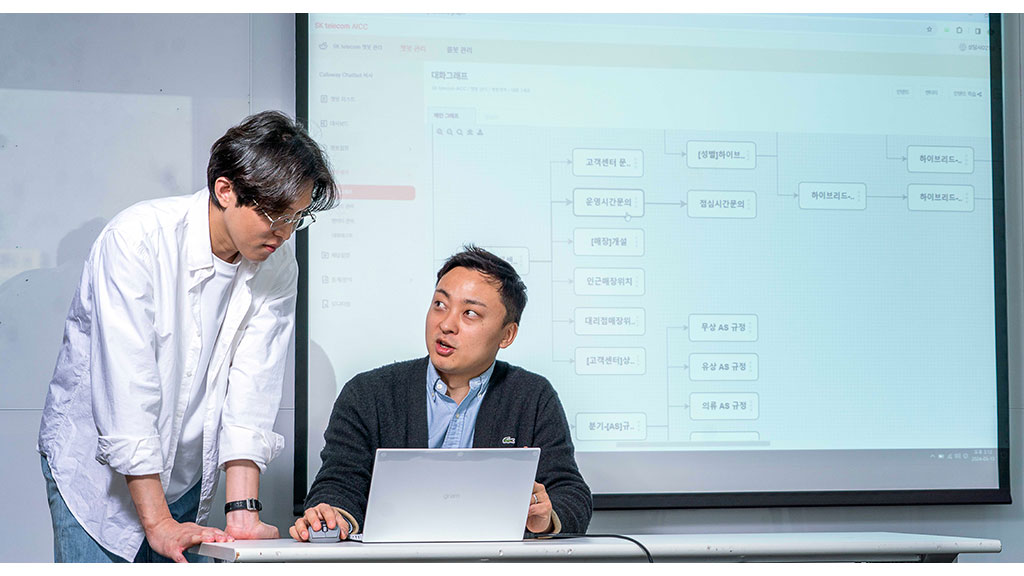

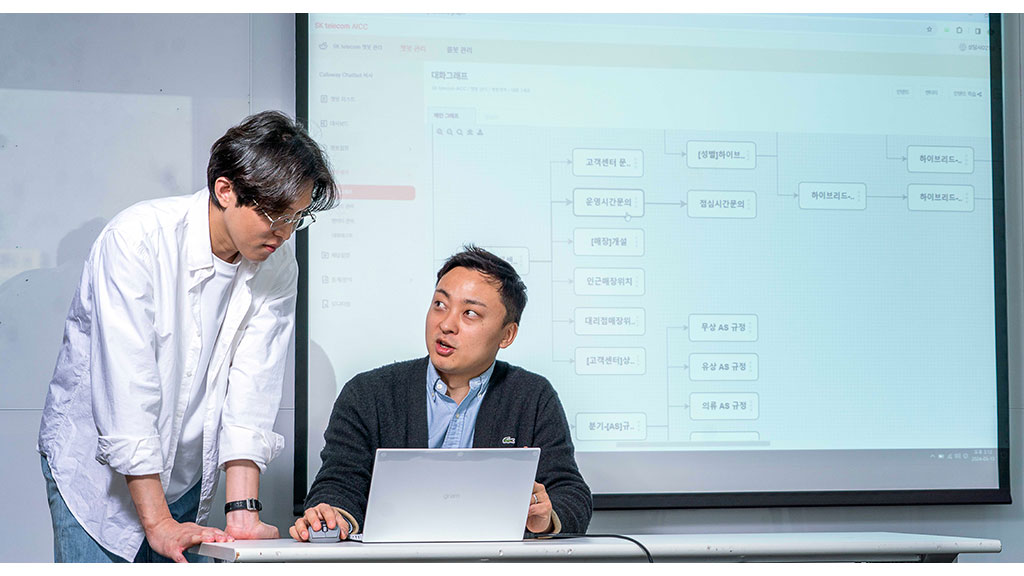

▲SKT employees operating the AI contact center service “SKT AI CCaaS” (Photo: SKT)

SKT introduces enterprise LLMs… starting with employee productivity

Sapeon to begin mass production of X330 LLM-inference AI chip in Q3

Enterprise generative AI adoption… securing reliable data is key

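A growing number of companies are adopting generative AI to improve work efficiency and raise productivity. Companies that own generative AI models are launching new enterprise-facing services and actively pursuing results. For enterprises adopting generative AI, the emphasis is increasingly on high-quality data that is reliable and domain-specific.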

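At the “2024 AX (AI Transformation) Era: Corporate Response Strategies, New AI Technologies and Innovation Cases Seminar,” hosted by the KIEI Industrial Education Institute in Guro-dong on the 22nd, SKT manager Kang Hyang-hong presented SKT's enterprise LLM (large language model) adoption cases and the key considerations involved.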

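On the 20th, SKT announced that it would aggressively expand its AI-based B2B business, having launched a range of new B2B services and secured many enterprise customers. The main launches are “SKT AI CCaaS,” an all-in-one subscription AI contact center (AICC) service, and “AI Copywriter,” which automatically generates advertising copy in a few seconds.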

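“To address customers' security concerns, SKT is expanding enterprise LLM deployments based on ‘A.X,’ a model trained on more than 1 trillion tokens and made lighter and better-performing for on-premises use,” Kang said. To minimize costs going forward, he projected that “the X330, developed with AI chip company Sapeon to support LLM inference, will enter mass production in the third quarter, cutting GPU costs in half.” Sapeon released the X330 in November last year and is developing the X340, which supports AI inference.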

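SKT's LLM “A.X” has 7 billion parameters. In general, the more parameters a model has, the better its general-purpose performance, but the harder it is to run in an on-premises environment; SKT says it deliberately kept A.X lightweight to specialize it for enterprise use. For comparison, OpenAI's ChatGPT (GPT-3.5) has 175 billion parameters, Naver's HyperCLOVA 204 billion, and Meta's Llama 2 between 7 billion and 70 billion, depending on the version.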
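To make the on-premises point concrete, the rough arithmetic below estimates how much memory is needed just to hold each model's weights. It is a back-of-the-envelope sketch, not SKT's sizing method: it assumes 16-bit (FP16/BF16) weights at 2 bytes per parameter and ignores activations, KV cache and runtime overhead, and it uses the parameter counts cited above.

```python
# Rough estimate of the memory needed just to store model weights.
# Assumption: 16-bit weights (2 bytes per parameter); activations,
# KV cache and runtime overhead are deliberately ignored.
BYTES_PER_PARAM_FP16 = 2

# Parameter counts as cited in the article.
MODELS = {
    "A.X (SKT)": 7e9,
    "GPT-3.5 (cited)": 175e9,
    "HyperCLOVA": 204e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return the weight footprint in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name:<16} {params / 1e9:>5.0f}B params -> ~{weight_memory_gb(params):,.0f} GB")
```

Under these assumptions a 7-billion-parameter model needs about 14 GB for its weights and fits on a single data-center GPU, while a 175-billion-parameter model needs about 350 GB and must be split across several accelerators, which is what makes large models hard to host in-house.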

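Through partnerships with Anthropic, OpenAI, Allganize, Konan Technology and others, SKT offers a range of LLMs in both cloud and on-premises (built-in) form, supporting document drafting, image generation, search and more. AICC in particular adds AI to existing contact centers: speech recognition that converts customers' voices into text, AI chatbots and callbots that handle simple requests automatically, summarization of customer inquiries and suggested answers, and organization and analysis of consultation records.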

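Companies in finance, the public sector, manufacturing and retail hold a wide range of databases, yet in most cases make little use of them. Applying an LLM in such cases can speed up data search, and LLMs can also help write the queries needed to extract the insights accumulated in those databases. “Generative AI is being used in many fields, but because adoption is still at an early stage, many companies want to apply it to their own employees first to raise productivity, and only then offer it to consumers,” Kang said.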
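The query-assistance idea can be sketched as a text-to-SQL flow: the database schema and a question go into a prompt, the model returns SQL, and the application runs it under a guardrail. In the sketch below the model call is a hypothetical placeholder (`hypothetical_llm_to_sql`) that returns a fixed query, the `sales` table and its rows are made up, and the "single SELECT only" check is an illustrative assumption rather than anything SKT has described.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with made-up sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("Seoul", 120), ("Busan", 80), ("Seoul", 50), ("Daegu", 30)],
    )
    return conn


def describe_schema(conn: sqlite3.Connection) -> str:
    """Collect CREATE TABLE statements so they can be placed in an LLM prompt."""
    rows = conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
    return "\n".join(row[0] for row in rows)


def hypothetical_llm_to_sql(schema: str, question: str) -> str:
    """Hypothetical placeholder for a model call; returns a fixed query here."""
    prompt = f"Schema:\n{schema}\n\nQuestion: {question}\nSQL:"
    _ = prompt  # a real system would send this prompt to an LLM
    return "SELECT region, SUM(amount) AS total FROM sales GROUP BY region ORDER BY total DESC"


def run_read_only(conn: sqlite3.Connection, sql: str) -> list:
    """Execute generated SQL only if it is a single SELECT statement."""
    stmt = sql.strip().rstrip(";")
    if not stmt.lower().startswith("select") or ";" in stmt:
        raise ValueError("only a single SELECT statement is allowed")
    return conn.execute(stmt).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    sql = hypothetical_llm_to_sql(describe_schema(conn), "Which region has the highest sales?")
    print(run_read_only(conn, sql))
```

Checking generated SQL before execution matters because model output is untrusted; a production system would more likely rely on a read-only database account than on string checks like the one here.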

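Kang named hallucination, security and cost as the main considerations when adopting an LLM. To counter hallucination, in which a chatbot states falsehoods as if they were true, SKT uses retrieval-augmented generation (RAG): it retrieves data similar to the user's query and has the model generate its answer using that data as context. SKT also secures data by deploying on-premises, and addresses cost by using lightweight, specialized models with 5 billion to 40 billion parameters.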
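The RAG step described above boils down to "find the most relevant documents, then tell the model to answer only from them." The sketch below illustrates that pattern under stated assumptions: SKT has not disclosed its implementation, the three documents are invented, and simple word-count cosine similarity stands in for the vector embeddings a real RAG system would typically use.

```python
import math
import re
from collections import Counter

# Toy knowledge base (made-up internal documents for illustration only).
DOCUMENTS = [
    "Refunds are processed within 7 business days after the request is approved.",
    "Employees must connect through the company VPN to access internal systems.",
    "The contact center operates from 9 a.m. to 6 p.m. on weekdays.",
]


def tokenize(text: str) -> Counter:
    """Lower-case word counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the model by asking it to answer only from the retrieved context."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{joined}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "How long do refunds take?"
    print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

The instruction to answer only from the supplied context, and to admit when the answer is missing, is what curbs hallucination; retrieval quality then determines how often the right context is actually supplied.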

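In addition, SKT adopted NPUs from domestic AI chipmaker Sapeon to address two problems: the wait of more than 30 weeks currently required to receive NVIDIA's H100 GPUs, which are used for generative AI training, and dependence on a near-monopoly of foreign GPUs.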

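Finally, Kang stressed the importance of feedback from companies in each domain. “Human feedback is the most important factor in raising an enterprise-specific LLM's performance above 90%,” he said. “Just as you keep training a new employee, an LLM has to accumulate and learn from high-quality, reliable, domain-specific data over the long term.”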