“Today's AI platforms cannot meet every need. They are not an open ecosystem, and they are locked in to a specific company.”

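At Intel Vision 2024, Intel unveiled new customers and partners and laid out an AI strategy built around open systems across all of AI. To support it, the company announced its next-generation Gaudi 3 AI accelerator, stressing performance comparable to Nvidia's H200 and a comprehensive enterprise AI strategy.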

▲Intel Vision 2024 press briefing in Korea (Photo: Intel)

Naver evaluating Gaudi 2...closer cooperation with Korean startups and universities

Gaudi 3 to launch in second half; H200-class performance and performance per watt touted as strengths

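At a press briefing in Korea on the 11th, Intel summarized the main announcements from Intel Vision 2024 and presented its collaboration with Naver. In particular, it took direct aim at the weaknesses of the Nvidia-centric AI ecosystem and stressed the strengths of its own open-ecosystem vision.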

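The main topics at Intel Vision 2024 were △a scalable systems strategy that covers every segment of AI within the enterprise through an open-ecosystem approach △enterprise customers' AI deployments and success stories △an open-ecosystem approach to advancing enterprise AI △the unveiling of the Intel Gaudi 3 AI accelerator △edge platforms for AI workloads and Ethernet-based networking products.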

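Notably, Ha Jung-woo, a center head at Naver, appeared during Pat Gelsinger's keynote and said Naver would work with Intel on establishing a joint research lab to expand the generative AI ecosystem and on performance evaluation ahead of adopting Gaudi 2.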

▲Center head Ha Jung-woo speaking at the Intel Vision 2024 keynote (Photo: Intel)

■ Naver evaluates Gaudi 2...leading the push to strengthen Korea's AI software ecosystem

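Dr. Lee Dong-soo of Naver Cloud said Naver is evaluating Gaudi 2 for the businesses it focuses on, which center on large language models (LLMs), and aims to use that work to expand an ecosystem with Korean universities and startups.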

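Lee said that since the arrival of ChatGPT, LLMs have become widely used across AI, and that engineers do a great deal of optimization not in Nvidia's CUDA itself but at the lower, assembly (machine-language) level. Because of memory management issues, this kind of optimization is hard to program and demands extensive hardware knowledge, which leaves LLM software development in the hands of a small group.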

▲Dr. Lee Dong-soo of Naver Cloud takes questions from reporters via online livestream at the press briefing in Korea.

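At the same time, CUDA is very difficult to program, and because the code changes whenever the chip changes, it has to be optimized again. Lee also questioned how much impact open source can have when a small number of developers build CUDA-based LLMs and the large majority use them without understanding CUDA.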

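LLM software is often led by universities and startups, which is why Naver is building an ecosystem with Korean universities and startups. Korean research labs and startups struggle to get access to GPUs and other AI servers, while large companies need LLM software development capability, so the two sides' interests align.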

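vLLM, which has recently become popular, was created at UC Berkeley as an open source project, and US companies such as Hugging Face and Together AI are said to be developing the software needed for LLMs themselves.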

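Naver said coordination on partnerships with Korean startups and leading universities is in its final stage and that it will announce the details once that is complete. Intel and Naver are using the Gaudi 2 under evaluation to strengthen the open source AI software community.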

■ Next-generation Gaudi 3 on par with Nvidia H200...performance per watt a strength

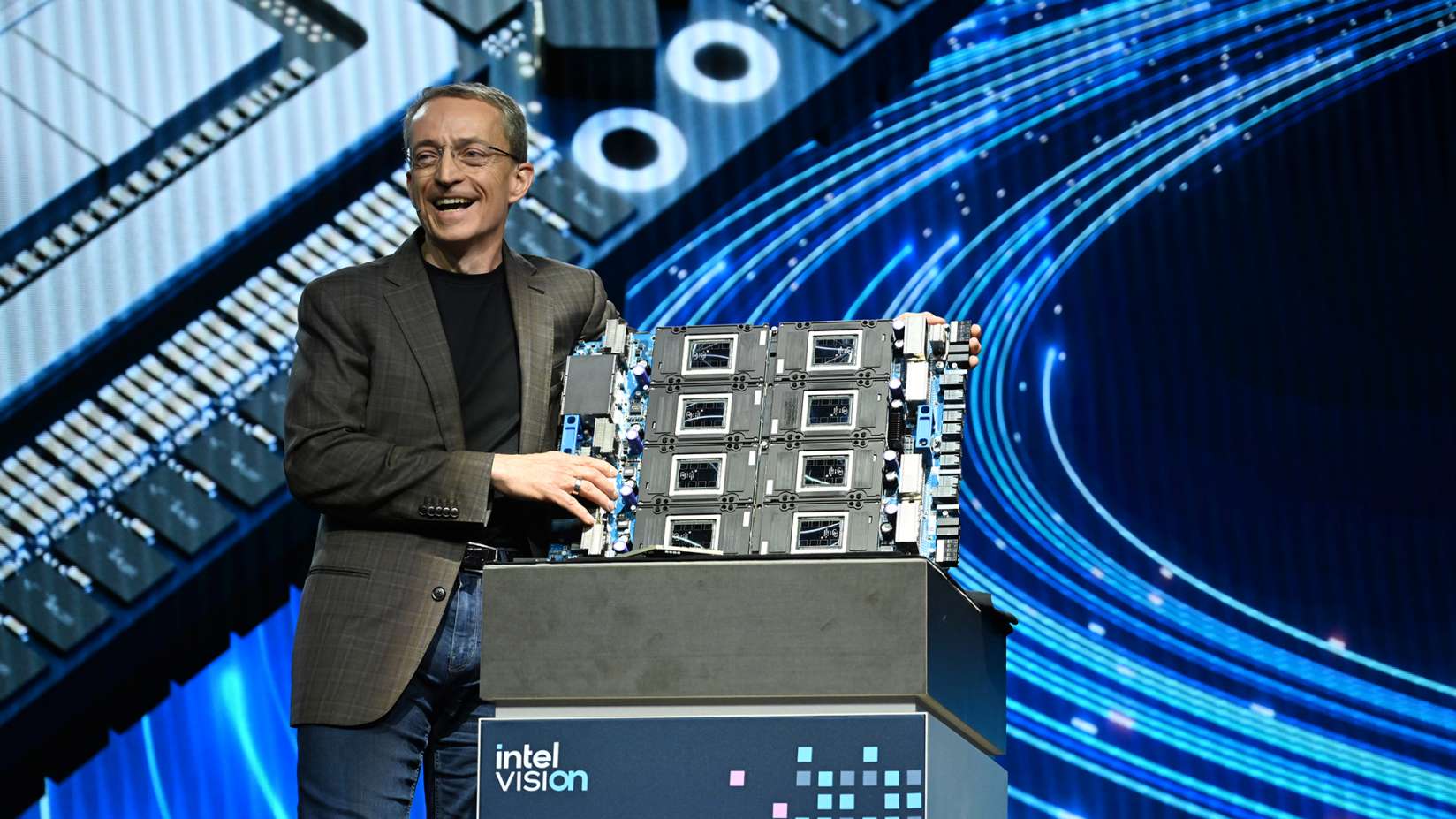

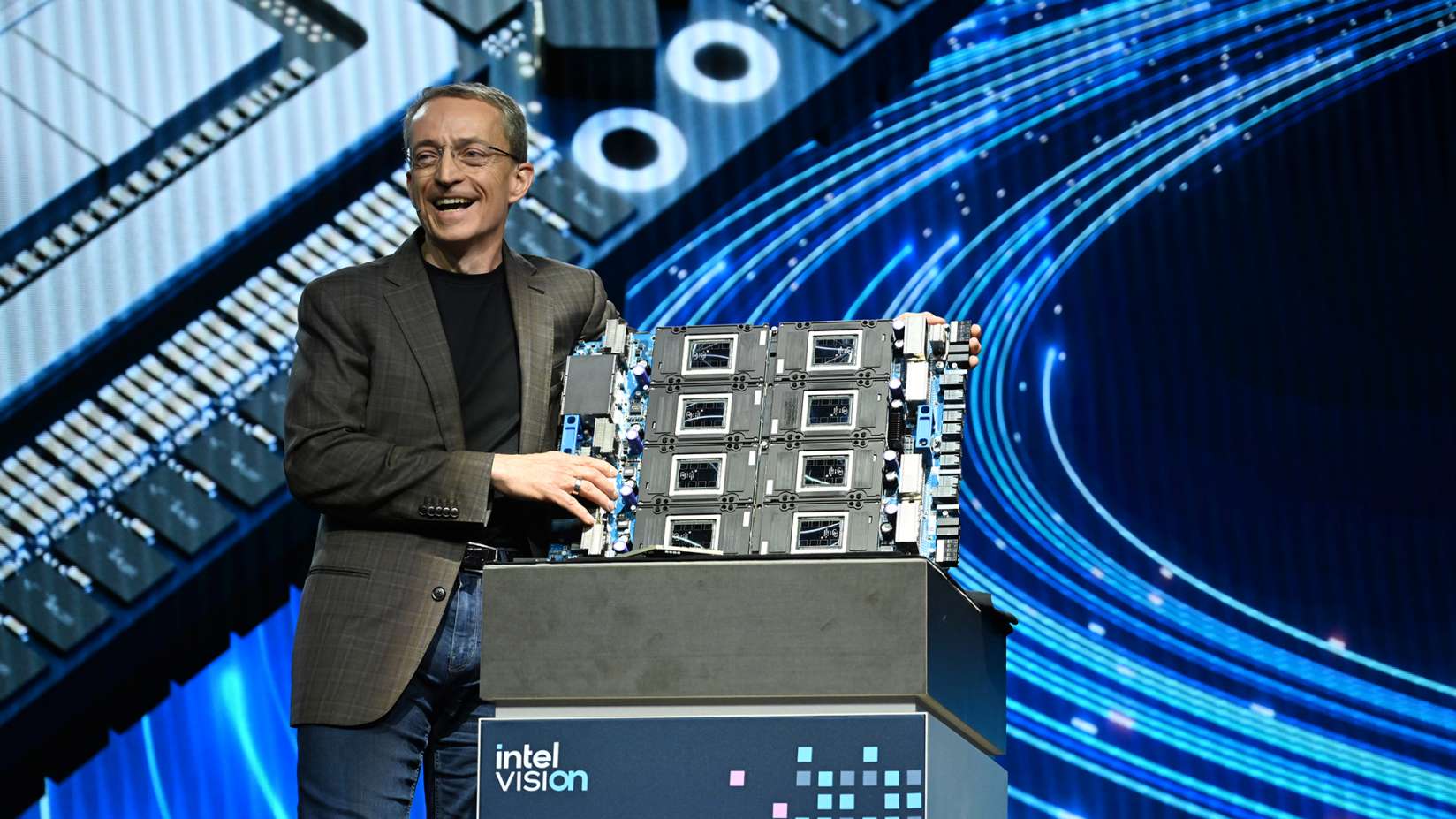

▲Pat Gelsinger unveiled the Gaudi 3 AI accelerator at Intel Vision on the 9th, local time. / (Photo: Intel)

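Naver rated the performance per watt of Intel's Gaudi 2 highly. Gaudi 3 continues the line of the previous generation, and Intel stressed its higher performance and performance per watt while announcing a launch in the second half of the year.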

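Intel says Gaudi 3 is an AI accelerator with 50% higher inference performance and 40% higher power efficiency than Nvidia's H100. Its main selling points are an open ecosystem with no lock-in to a specific vendor and an open-standard interconnect.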

Gaudi 3's specifications include △a 5nm process △64 Tensor Processor Cores △8 MMEs (Matrix Multiplication Engines) performing 64,000 parallel operations △128GB of HBM2e memory △3.7TB/s of memory bandwidth △96MB of SRAM △24 200Gb Ethernet ports △PyTorch framework integration △PCIe.

According to materials released by Intel, Gaudi 3 posted inference results on par with or slightly below Nvidia's H200 on Llama 7B and 70B, and higher inference performance on Falcon 180B.

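Against the H100, Intel said Gaudi 3 delivered on average 50% higher inference throughput on the Llama 2 and GPT-3 models, and on average 40% better power efficiency on the Llama and Falcon models.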

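Intel Managing Director Na Seung-ju said, “We aim to ship 100 million AI accelerators by 2025,” adding that “the Gaudi 3 accelerator will be the foundation for Falcon Shores, Intel's next-generation graphics processing unit (GPU) for AI and HPC.”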