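Large-scale machine-learning services such as ChatGPT have recently come into wide use. As the companies operating these services race to enlarge their models and datasets in pursuit of higher performance, the memory capacity demanded of data centers has grown sharply as well. Big tech companies are therefore looking to Compute Express Link (CXL), a memory interconnect technology, for a solution.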

▲ Panmnesia CEO Myoungsoo Jung delivering a keynote at the Institute of Semiconductor Engineers event / (Photo: Panmnesia)

Roadmap and development status of core CXL product lines announced

CXL-based data center for accelerating large-scale AI presented

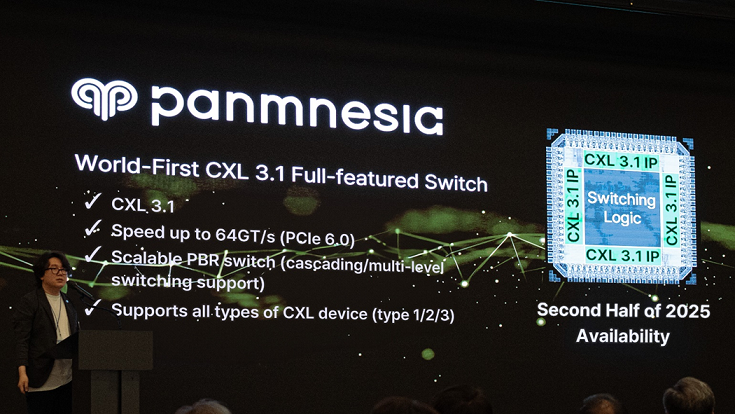

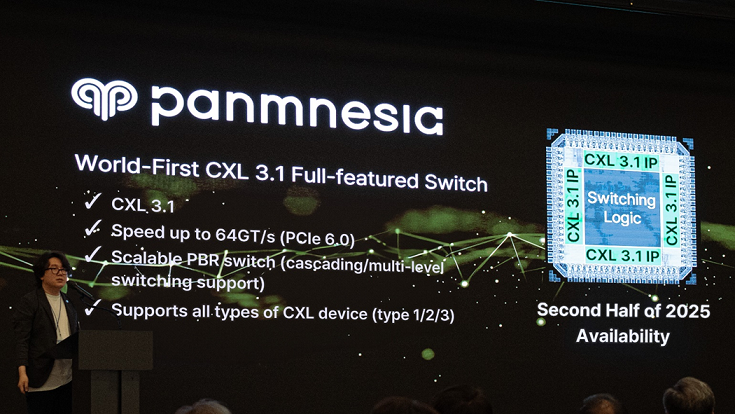

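Panmnesia, a fabless startup developing CXL semiconductors, recently presented the development status and roadmap of its core CXL product lines in the main keynote at the Institute of Semiconductor Engineers conference. The company drew attention by announcing that it plans to produce its CXL 3.1 switch SoC and supply it to customers in the second half of next year.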

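The CXL 3.1 switch SoC is built on intellectual property (IP) developed entirely with domestic Korean technology, and it is expected to be used in designing next-generation data center architectures suited to LLMs and large-scale AI applications.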

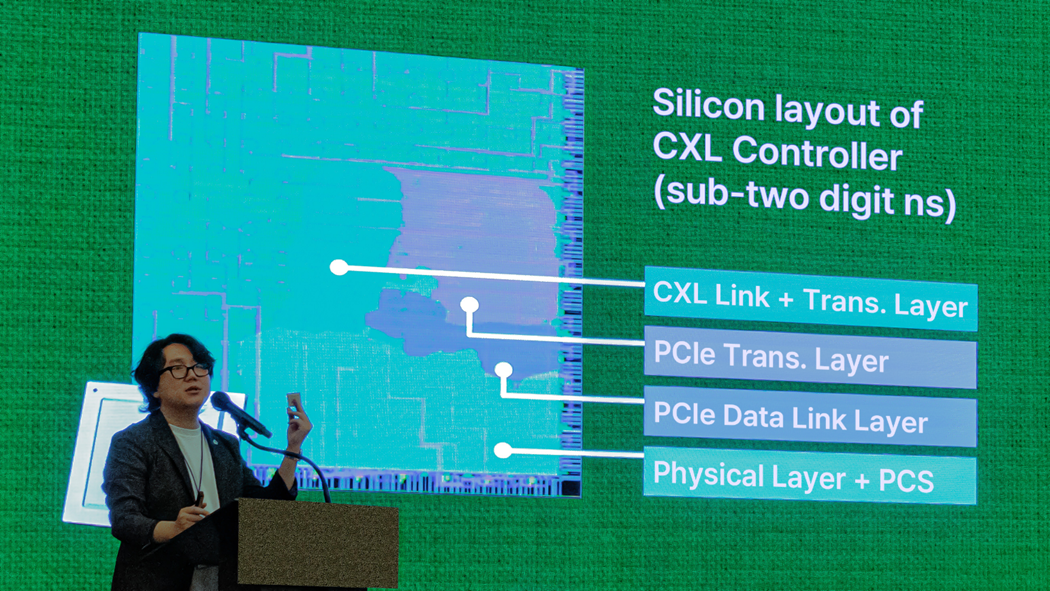

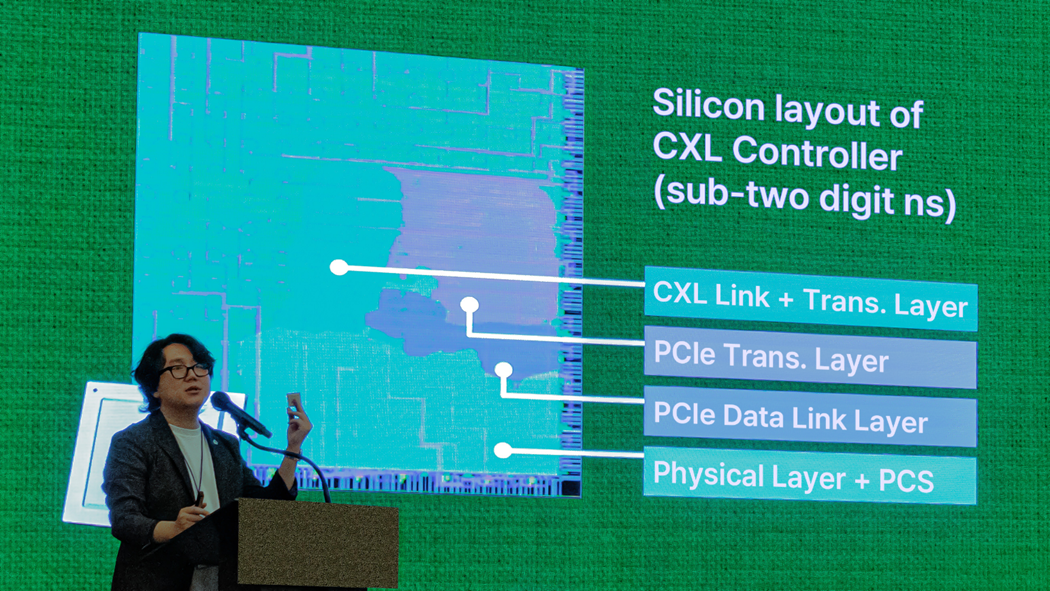

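Because CXL allows a unified memory space to be composed, it enables cost-efficient memory expansion. According to Panmnesia, it is also sound in terms of performance, since the series of operations that manage the expanded memory resources is carried out in hardware-accelerated form by the CXL controller.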

▲ Panmnesia CEO Myoungsoo Jung unveiling the fabricated CXL 3.1 controller chip / (Photo: Panmnesia)

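In the main keynote at the Institute of Semiconductor Engineers conference, CEO Jung presented the development status and roadmap for Panmnesia's core CXL product lines: the CXL switch SoC and CXL IP.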

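Panmnesia's switch supports all of the features defined in CXL 3.1, the latest standard, and is also designed to interoperate without problems with devices that support earlier versions of the standard. Its key strength is reported to be high scalability: by connecting multiple switches in several tiers, or by forming a fabric structure, it can connect hundreds of devices or more installed across multiple servers.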
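As a rough illustration of why tiering switches scales to hundreds of devices, the sketch below counts the endpoints reachable through a simple tree of switches. The port count and the rule of one upstream port per switch are assumptions made for illustration; they are not figures from Panmnesia or from the CXL specification, and a real fabric topology need not be a tree.

```python
def max_endpoints(ports_per_switch: int, levels: int) -> int:
    """Upper bound on endpoint devices in a simple tree of switches.

    Assumption (hypothetical, for illustration only): every switch
    reserves one port for its upstream link and uses all remaining
    ports as downstream links.
    """
    if ports_per_switch < 2:
        raise ValueError("a switch needs at least one upstream and one downstream port")
    if levels < 1:
        raise ValueError("at least one level of switches is required")
    downstream_ports = ports_per_switch - 1
    # Each added level multiplies the reachable endpoints by the fan-out.
    return downstream_ports ** levels


if __name__ == "__main__":
    # Hypothetical 16-port switch, one to three levels deep.
    for depth in (1, 2, 3):
        print(depth, max_endpoints(16, depth))
```

Under these assumed numbers, a second level of switches is already enough to move from tens of devices to hundreds.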

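Because Panmnesia has secured IP for every CXL protocol, namely CXL.mem, CXL.cache, and CXL.io, the devices that can be attached to the switch, including memory, AI accelerators, and GPUs, are not restricted to any particular type. The company described this versatility as another differentiator of its switch.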

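Thanks to these characteristics, Panmnesia's CXL switch can be used to connect the many and varied devices installed in real enterprise data centers, and it is therefore showing potential for adoption in next-generation data center architectures that accelerate large-scale AI applications such as ChatGPT.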

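The machine-learning services run by data center operators and hyperscalers such as Microsoft combine several kinds of models and computations with differing characteristics, and the device configuration best suited to each model or computation is different.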

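For example, recent versions of ChatGPT use an LLM together with a vector database (Vector DB) to process information accurately. Running the LLM efficiently requires many GPUs, whereas storing a large vector database requires many memory and storage devices.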

Consequently, it is not possible to build a single, perfect server configuration that satisfies the needs of such a wide range of models and computations.

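"What Panmnesia proposes is to build separate servers that each gather a particular kind of system device in one place, such as a server made up of GPUs and a server made up of memory, and to connect these servers via CXL into one integrated system," Jung said. "If each device pool is then dedicated to the applications it handles well, diverse models and computations can be processed efficiently."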

"For this, a CXL switch must be able to connect many kinds of devices and to link multiple servers into a single system, and Panmnesia's switch, which is general-purpose and highly scalable, is well suited to that role," he added.
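The pooled design described in the quotes above can be pictured as a small dispatcher that sends each workload to the device pool suited to it. This is a minimal sketch under stated assumptions, not Panmnesia's software: the `DevicePool` class, the pool names, and the workload-to-pool mapping are all hypothetical, and the CXL interconnect itself is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class DevicePool:
    """A server that gathers one kind of device (hypothetical model)."""
    name: str
    kind: str  # e.g. "gpu" or "memory"
    assigned: list[str] = field(default_factory=list)


# Hypothetical mapping from workload type to the pool kind that suits it,
# following the article's example: LLMs need GPUs, vector DBs need memory.
WORKLOAD_TO_POOL_KIND = {
    "llm_inference": "gpu",
    "vector_db_lookup": "memory",
}


def dispatch(workload: str, pools: list[DevicePool]) -> DevicePool:
    """Assign a workload to the first pool of the matching kind."""
    kind = WORKLOAD_TO_POOL_KIND[workload]
    for pool in pools:
        if pool.kind == kind:
            pool.assigned.append(workload)
            return pool
    raise LookupError(f"no pool of kind {kind!r} is available")


if __name__ == "__main__":
    pools = [DevicePool("gpu-pool", "gpu"), DevicePool("mem-pool", "memory")]
    for job in ("llm_inference", "vector_db_lookup"):
        print(job, "->", dispatch(job, pools).name)
```

In this picture, a request that needs both an LLM and a vector database is split into two workloads, each handled by a different pool within the same integrated system.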

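As a technology that can be used to build next-generation data centers such as AI data centers, CXL solutions are drawing attention as to whether the market will adopt them.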