
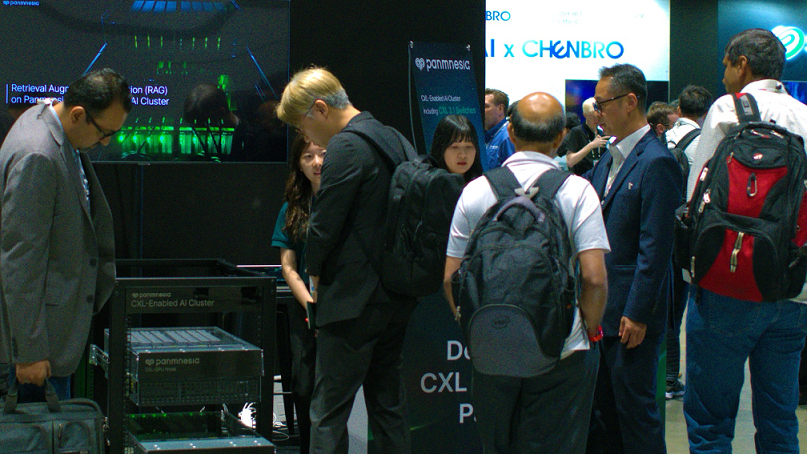

▲ Visitors examine the products on display at the Panmnesia booth at the OCP Global Summit in California, USA / (Photo: Panmnesia)

Panmnesia Unveils CXL-Enabled AI Cluster

Exhibits at OCP Global Summit, Drawing Interest from Google, Microsoft, and Others

CXL 3.1 Switch Chip to Be Supplied in the Second Half of Next Year

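As AI is commercialized more widely, the accuracy of AI services directly affects corporate revenue. Big tech companies have recently been using more data and larger models to raise that accuracy, and in the process their memory demand has surged. This in turn adds the cost of extra servers and components, leading to excessive spending.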

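Panmnesia, a Korean fabless startup, announced on the 18th that it had unveiled the world's first AI cluster incorporating a CXL 3.1 switch. The unveiling took place on the 15th at the OCP Global Summit, the world's largest data center infrastructure event, held in California, USA.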

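The OCP Global Summit is a forum for discussing how to build ideal data center infrastructure, including ways to address the cost inefficiency of existing data centers. This year more than 7,000 participants, including many global companies, gathered and focused their discussions on solutions for AI.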

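At the event, Panmnesia drew considerable attention with its CXL-enabled AI cluster, a solution that uses CXL (Compute Express Link), a next-generation interface technology, to substantially improve the cost efficiency of AI data centers.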

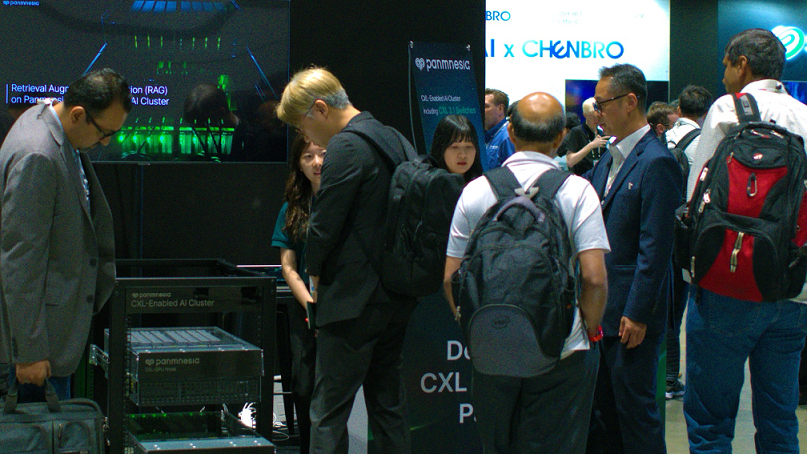

▲ The Panmnesia booth / (Photo: Panmnesia)

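Panmnesia's CXL-enabled AI cluster is a framework built with the company's main products, its CXL 3.1 switch and CXL 3.1 IP. In it, CXL-memory nodes that store large-scale data and CXL-GPU nodes that process machine learning computations at high speed are connected through the CXL 3.1 switch.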

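To expand memory, an operator only needs to install additional memory and the CXL devices used for memory expansion. Because this avoids unnecessary spending on other server components, the cost of memory expansion can be reduced.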
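The cost argument above can be made concrete with a small calculation. The following Python sketch is illustrative only: the `ExpansionOption` type, the helper functions, and every number in it are hypothetical placeholders in arbitrary cost units, not figures from Panmnesia or the article. It assumes that each unit of expansion carries a memory cost plus an overhead cost for everything else purchased alongside the memory.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpansionOption:
    """One way of adding memory capacity (all fields are hypothetical)."""
    name: str
    capacity_gb_per_unit: int      # memory added by one unit
    memory_cost_per_unit: float    # cost of the memory itself
    overhead_cost_per_unit: float  # everything else bought with the unit


def units_needed(option: ExpansionOption, target_gb: int) -> int:
    """Number of whole units required to reach the target capacity."""
    return math.ceil(target_gb / option.capacity_gb_per_unit)


def expansion_cost(option: ExpansionOption, target_gb: int) -> float:
    """Total cost of reaching the target capacity with this option."""
    per_unit = option.memory_cost_per_unit + option.overhead_cost_per_unit
    return units_needed(option, target_gb) * per_unit


# Placeholder values chosen only to show the shape of the comparison.
# Server-based expansion pays for CPUs, boards, and so on as overhead;
# CXL-based expansion pays only for the CXL memory expansion device.
server = ExpansionOption("additional server", 512, 4000.0, 6000.0)
cxl = ExpansionOption("CXL memory expander", 512, 4000.0, 1000.0)

target_gb = 4096
server_cost = expansion_cost(server, target_gb)
cxl_cost = expansion_cost(cxl, target_gb)

print(f"{server.name}: {server_cost:.0f} cost units")
print(f"{cxl.name}: {cxl_cost:.0f} cost units")
print(f"saving: {server_cost - cxl_cost:.0f} cost units")
```

Under these placeholder values, the memory cost is identical for both options and the entire difference comes from the overhead term, which is the spending the article says CXL-based expansion avoids. Actual savings depend on real device and server prices, which the article does not give.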

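During the exhibition, Panmnesia demonstrated acceleration of RAG (Retrieval-Augmented Generation), a recent LLM-based application, on its AI cluster. According to a company representative, using Panmnesia's CXL-enabled AI cluster can cut inference latency by a factor of about six or more compared with existing storage/RDMA-based systems.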

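A Panmnesia representative said, "Global data center companies attending the OCP Global Summit, including Google, Microsoft, Supermicro, and GIGABYTE, showed great interest," adding, "In particular, many server vendors said they hope to adopt Panmnesia's CXL 3.1 switch chip, which is scheduled to be provided to customers in the second half of next year, in their own server products."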

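Panmnesia's CXL switch supports the high-scalability features of the CXL 3.1 standard and connections to CXL devices such as CPUs, GPUs, and memory expanders. It can link hundreds of devices or more of various kinds into a single system, enabling practical, data-center-scale memory expansion. The CXL 3.1 switch is scheduled to be provided to customers in the second half of next year.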

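The representative continued, "NVIDIA, AMD, and others showed interest in the fact that Panmnesia's CXL 3.1 IP can be used to enable CXL on GPU devices," and added, "We look forward to a future in which Panmnesia's CXL IP is built into these companies' accelerator products."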

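Meanwhile, at the OCP Future Technology Symposium, Panmnesia presented design techniques for integrating CXL into GPUs. It was also invited to speak at the Memory Fabric Forum, where it introduced the latest CXL technology for accelerating AI to experts from around the world.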