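NVIDIA recently unveiled its new GB200 Grace Blackwell Superchip at NVIDIA GTC 2024. Alongside the Blackwell GPU, NVIDIA's first chiplet-based GPU, the company introduced an AI superchip whose performance and efficiency overwhelmed the market. In an AI server market where data centers and hyperscalers are effectively fighting an energy war, NVIDIA has dropped a powerful bomb.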

▲ NVIDIA Blackwell B200 GPU (Photo: NVIDIA)

Blackwell GPU jolts the market with its performance per watt

On power efficiency, 8,000 H100s roughly equal 2,000 GB200s

Apple CEO Tim Cook in China: "AI is key to carbon neutrality"


■ Blackwell GPU: performance per watt set to make it a black hole in the server market

▲

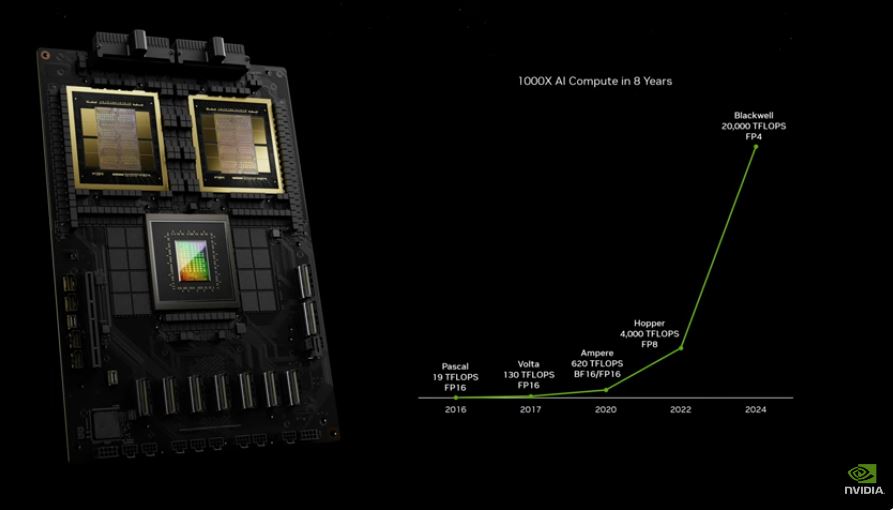

블랙웰 슈퍼칩, 8년간 1,000배 성능 향상(캡처:엔비디아 유튜브 채널)

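The NVIDIA Blackwell GPU packages two dies, each carrying 104 billion transistors, into a single chiplet-based GPU, the Blackwell B200. It is paired with eight stacks of HBM3e, the fifth generation of high-bandwidth memory.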

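Compared with the previous-generation H100 Hopper GPU, the B200 delivers 5x the AI compute performance with FP4 and 2.5x with FP8. NVIDIA CEO Jensen Huang stressed that "compared with Pascal at 19 teraflops (TFLOPS) eight years ago, Blackwell has achieved a 1,000-fold performance increase."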
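As a rough check on the keynote figure, the sketch below works out what a 1,000x gain over eight years implies, using only the two numbers quoted above (19 TFLOPS for Pascal and a 1,000x multiple). The variable names are illustrative, and the per-year factor assumes smooth compounding, which real product generations do not follow.

```python
# Back-of-the-envelope check of the "1,000x in 8 years" claim.
# Inputs are the figures quoted in the article; names are illustrative.
PASCAL_TFLOPS = 19.0      # Pascal throughput cited by Jensen Huang
CLAIMED_GAIN = 1_000.0    # claimed Pascal-to-Blackwell multiple
YEARS = 8

# Throughput implied by the claim, in TFLOPS and PFLOPS.
implied_tflops = PASCAL_TFLOPS * CLAIMED_GAIN
implied_pflops = implied_tflops / 1_000.0

# Equivalent constant yearly multiplier (assumes smooth compounding).
yearly_factor = CLAIMED_GAIN ** (1.0 / YEARS)

print(f"Implied Blackwell throughput: {implied_pflops:.0f} PFLOPS")
print(f"Equivalent yearly gain: {yearly_factor:.2f}x")
```

Under these assumptions the claim corresponds to about 19 PFLOPS and a sustained gain of roughly 2.4x per year.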

On top of this, as an AI server solution, NVIDIA connected two Blackwell GPUs and one Grace CPU over its NVLink chip-to-chip interconnect to create the GB200 Grace Blackwell Superchip, its highest-performing AI superchip to date.

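The superchip is formidable on its own, but NVIDIA's GB200 NVL72 links 36 of them in a single rack. Compared with the HGX H100 platform, it is billed as 25 times more energy efficient and as delivering 30 times faster real-time LLM inference on a 1-trillion-parameter language model, an exceptional level of performance per watt.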
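The rack's composition follows directly from the counts given above. This minimal sketch simply multiplies them out; the constant names are illustrative.

```python
# Component count for one GB200 NVL72 rack, using the article's figures.
SUPERCHIPS_PER_RACK = 36   # GB200 superchips linked in one rack
GPUS_PER_SUPERCHIP = 2     # Blackwell GPUs per superchip
CPUS_PER_SUPERCHIP = 1     # Grace CPUs per superchip

gpus_per_rack = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
cpus_per_rack = SUPERCHIPS_PER_RACK * CPUS_PER_SUPERCHIP

print(f"GPUs per rack: {gpus_per_rack}")   # matches the "72" in NVL72
print(f"CPUs per rack: {cpus_per_rack}")
```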

■ Data centers as future AI factories: AI performance per watt ties directly to revenue and carbon neutrality

▲ NVIDIA GB200 NVL72 (Photo: NVIDIA)

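As the shift to AI across industry gathers pace, demand for powerful server GPUs for AI compute has surged, centered on data centers. The GPUs' high power consumption has burdened companies with costs on par with the price of the AI chips themselves, making performance per watt a major industry concern.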

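Huang said, "Going forward, data centers will be thought of as AI factories, and their goal is to generate revenue." His point was that the purpose of a data center is to generate intelligence, but companies that operate data centers will inevitably want an AI platform that delivers the same performance with lower power consumption.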

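In that sense, the NVIDIA GB200 NVL72 contributes to power efficiency with the following gains over the previous-generation H100: △30x LLM inference △4x LLM training △25x energy efficiency △18x data processing.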

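At GTC 2024, Huang touted the GB200 NVL72's performance per watt. Training a GPT model with 1.8 trillion parameters takes about three to five months on 25,000 Ampere-generation GPUs. Doing the same job on Hopper H100 requires about 8,000 GPUs and draws roughly 15 MW over 90 days.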

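On Blackwell, by contrast, the same job can be finished in the same period with just 2,000 GPUs, which draw only 4 MW. Running fewer GPUs at roughly a quarter of the power improves space efficiency as well as power efficiency. That is also an important consideration for cooling systems.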
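The comparison can be made concrete with a little arithmetic. The sketch below uses only the GPU counts, power figures, and 90-day duration quoted above; the `TrainingRun` class and its field names are illustrative, and the energy totals assume the quoted power draw is constant for the whole run.

```python
# Compare the two training setups described in the keynote.
# All inputs are the article's figures; the dataclass and names are illustrative.
from dataclasses import dataclass

HOURS_PER_DAY = 24


@dataclass(frozen=True)
class TrainingRun:
    name: str
    gpus: int
    power_mw: float   # sustained power draw in megawatts
    days: int

    @property
    def energy_mwh(self) -> float:
        """Total energy, assuming constant power draw for the whole run."""
        return self.power_mw * self.days * HOURS_PER_DAY

    @property
    def kw_per_gpu(self) -> float:
        """Average power per GPU in kilowatts, as implied by the MW figure."""
        return self.power_mw * 1_000 / self.gpus


hopper = TrainingRun("H100", gpus=8_000, power_mw=15.0, days=90)
blackwell = TrainingRun("Blackwell", gpus=2_000, power_mw=4.0, days=90)

for run in (hopper, blackwell):
    print(f"{run.name}: {run.energy_mwh:,.0f} MWh, {run.kw_per_gpu:.2f} kW per GPU")

power_ratio = blackwell.power_mw / hopper.power_mw
print(f"Blackwell power as a share of Hopper: {power_ratio:.1%}")
```

On these figures the Blackwell setup draws about 27% of the Hopper power, consistent with the roughly one-quarter figure cited above. The implied power per GPU is slightly higher on Blackwell (2 kW versus about 1.9 kW), so the saving comes from needing a quarter as many GPUs.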

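To overcome the drawbacks of air cooling, the data center industry has recently been adopting water cooling, focusing on power usage effectiveness (PUE) to cut operating costs. Immersion cooling is also being developed and deployed in places, but for now water cooling alone delivers sufficient efficiency. The GB200 NVL72 likewise uses liquid cooling to improve efficiency.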
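PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so a value closer to 1.0 means less overhead from cooling and power distribution. The sketch below applies that definition; the function name and the sample energy figures are hypothetical and are not drawn from the article or from any real facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical example: the same IT load with two made-up overhead levels.
it_load_kwh = 1_000.0
print(f"Higher overhead: PUE = {pue(1_600.0, it_load_kwh):.2f}")
print(f"Lower overhead:  PUE = {pue(1_200.0, it_load_kwh):.2f}")
```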

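In its Electricity 2024 report, the International Energy Agency (IEA) said data centers worldwide used 460 terawatt-hours (TWh) of electricity in 2022, equivalent to 2% of global electricity demand, and projected that consumption will rise to between 620 and 1,050 TWh in 2026.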
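To put the IEA range in perspective, the sketch below converts the quoted figures into total and annualized growth. It uses only the numbers above; the `cagr` helper and constant names are illustrative, and because the 2% share is a rounded figure, the implied global demand is only approximate.

```python
# Growth implied by the IEA figures quoted in the article.
BASE_YEAR, BASE_TWH = 2022, 460.0
TARGET_YEAR = 2026
LOW_TWH, HIGH_TWH = 620.0, 1_050.0
DATA_CENTER_SHARE = 0.02   # data centers' share of global demand in 2022


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0


years = TARGET_YEAR - BASE_YEAR
low_cagr = cagr(BASE_TWH, LOW_TWH, years)
high_cagr = cagr(BASE_TWH, HIGH_TWH, years)

# Global demand implied by "460 TWh is 2% of the total" (approximate).
implied_global_twh = BASE_TWH / DATA_CENTER_SHARE

print(f"Total growth: {LOW_TWH / BASE_TWH - 1:.0%} to {HIGH_TWH / BASE_TWH - 1:.0%}")
print(f"Annualized: {low_cagr:.1%} to {high_cagr:.1%}")
print(f"Implied 2022 global demand: {implied_global_twh:,.0f} TWh")
```

The projected range works out to roughly 8% to 23% growth per year over four years.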

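With data center power consumption surging as AI spreads, innovation needs to continue both in efficient advanced cooling systems and in AI chip solutions with high performance per watt.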

■ Apple CEO Tim Cook visits China: "AI is key to carbon neutrality"

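On the 24th, local time, Apple CEO Tim Cook attended a climate change discussion at the China Development Forum and stressed that AI is a key technology that can substantially reduce carbon emissions.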

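According to Bloomberg and other major foreign media, Cook said, "AI can help with tracking carbon footprints, for example by letting companies calculate an individual's carbon emissions, and it can identify recyclable and reusable materials and provide companies with recycling strategies."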

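Apple is pouring considerable investment and resources into AI development. The Special Projects Group, which had been researching an Apple electric car, is in the process of being disbanded, and many of the unit's key researchers are reportedly moving to the AI division.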

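The company is also moving aggressively to recruit AI specialists, offering a base salary of 400 million won. Although it has not disclosed specific figures, Apple said early this year that it is committing large-scale capital to AI development, and a major Apple announcement marking a fresh start in generative AI is expected in the second half of this year.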

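Against this backdrop, Cook acknowledged at the climate change discussion in China that Apple has fallen behind OpenAI in the AI race, while reiterating that large-scale investment and resources are going into AI development to drive more innovation.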