
UNIST develops Text2Relight AI model

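A new AI can create lighting effects for photos and videos from text input alone. Without complex editing tools, users can easily adjust the color tone of a photo or video and even capture the emotional nuance of phrases such as "piping-hot fried chicken" or "cold blue light."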

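A team led by Professor Seungryul Baek at the UNIST Graduate School of Artificial Intelligence has developed Text2Relight, an AI model that applies lighting effects based on creative text prompts.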

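Because the technology adjusts the color and lighting of photos and videos from text input alone, it can readily produce moods such as "piping-hot fried chicken" or "cold blue light" without complex editing tools.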

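The research was conducted jointly with Adobe and has been accepted to AAAI 2025, the conference of the Association for the Advancement of Artificial Intelligence and one of the three major AI conferences.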

The team will present the technology at the annual conference, which opens in Philadelphia, USA, on the 25th.

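A key strength of Text2Relight is that it can express a wide range of lighting properties, including color, brightness, and emotional mood, through creative natural-language text.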

It can also adjust the colors of both the person and the background at the same time without distorting the original image.

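Earlier text-based image-editing AI models were not specialized for lighting data, so they tended to distort the original image or offered only limited control over lighting. The new model addresses these problems.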

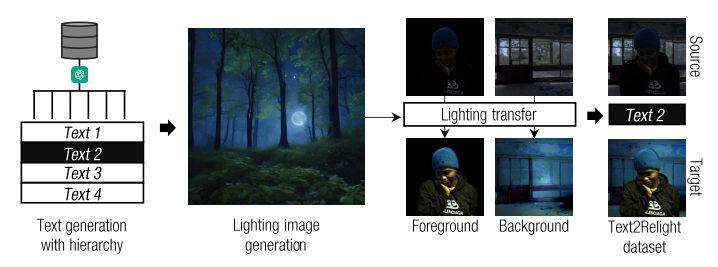

To let the AI learn the relationship between creative text and lighting, the team built a large-scale synthetic dataset.

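The team used ChatGPT and a text-based diffusion model to generate lighting data, then applied the OLAT (one-light-at-a-time) technique and lighting transfer to build a large synthetic dataset spanning diverse lighting conditions.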
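The article does not describe how the team combined OLAT and lighting transfer. As background only, here is a minimal sketch of the general principle that makes OLAT captures useful for relighting data: because light transport is linear, a subject's appearance under any mix of the captured lights is a weighted sum of its single-light images. The function name `relight_from_olat`, the array shapes, and the "warm"/"cool" light colors are illustrative assumptions, not the Text2Relight pipeline.

```python
import numpy as np


def relight_from_olat(olat_stack: np.ndarray, light_rgb: np.ndarray) -> np.ndarray:
    """Recombine one-light-at-a-time (OLAT) captures into a relit image.

    Hypothetical helper for illustration, not the authors' code.
    olat_stack: shape (N, H, W, 3), linear RGB image of the subject lit by light i alone.
    light_rgb:  shape (N, 3), RGB intensity assigned to light i in the target lighting.
    Returns an (H, W, 3) linear RGB image.
    """
    if olat_stack.ndim != 4 or olat_stack.shape[-1] != 3:
        raise ValueError("olat_stack must have shape (N, H, W, 3)")
    if light_rgb.shape != (olat_stack.shape[0], 3):
        raise ValueError("light_rgb must have shape (N, 3)")
    # Light transport is linear: the image under combined lights is the
    # per-channel weighted sum of the single-light images over all N lights.
    return np.einsum("nhwc,nc->hwc", olat_stack, light_rgb)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    olat = rng.random((4, 2, 2, 3))          # assumed: 4 lights, 2x2-pixel capture
    warm = np.tile([1.0, 0.6, 0.3], (4, 1))  # assumed "warm" color for every light
    cool = np.tile([0.3, 0.5, 1.0], (4, 1))  # assumed "cool blue" color for every light
    print(relight_from_olat(olat, warm).shape)
    print(relight_from_olat(olat, cool).shape)
```

Swapping `warm` for `cool` re-tints the same capture without changing the subject's geometry. That is the kind of controlled variation a synthetic relighting dataset can provide; how Text2Relight actually pairs such lighting with text prompts is not specified in the article.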

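The team also trained the model on auxiliary data for tasks such as shadow removal and light-position adjustment, which strengthened visual consistency and lighting realism.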

"Text2Relight has great potential in the content field, for example by shortening work time in photo and video editing and making virtual and augmented reality more immersive," said Professor Baek.

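Junuk Cha, a researcher at the UNIST Graduate School of Artificial Intelligence, is the first author. The research was supported by Adobe and the Ministry of Science and ICT.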