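A research team led by Professor Seungryul Baek of the Graduate School of Artificial Intelligence at UNIST (President Yong Hoon Lee) has developed Text2HOI, a technology that generates hand-object interaction motions from text entered into a prompt window.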

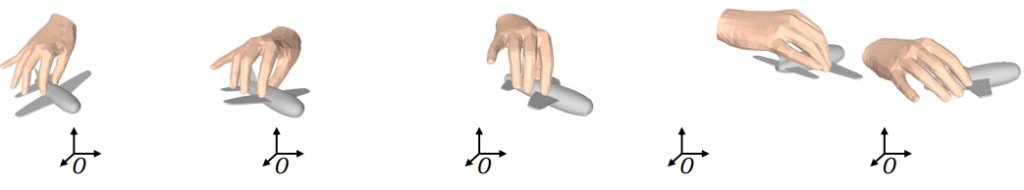

▲Generated hand-object interaction motions

UNIST Professor Seungryul Baek's team: hand motions predicted and controlled from text input

A technology has emerged that produces precise 3D motion from simple text input alone, without any complex initial setup.

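A research team led by Professor Seungryul Baek of the Graduate School of Artificial Intelligence at UNIST (President Yong Hoon Lee) announced on the 19th that it has developed Text2HOI, a technology that generates hand-object interaction motions when text is entered into a prompt window.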

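Because a single line of simple text is enough to precisely control complex interactions between hands and objects, the technology is expected to speed up the commercialization of 3D virtual reality applications.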

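Using text commands, the technology can produce motions such as grasping and releasing an object, along with other interactions with it. It can be applied in fields such as virtual reality (VR), robotics, and medicine, and because it requires no complicated setup, anyone can use it easily.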

The system analyzes the text the user enters and predicts where the hands should make contact with the object named in the command.

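For example, given the command “Pass the apple with both hands,” it probabilistically estimates the possible contact points between the hands and the apple. Then, as the hands pick up the apple, it adjusts their position and angle to the apple's size and shape, producing fine-grained hand motion.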
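The release does not describe the model's internals. As a rough illustration only, assume a model outputs one raw score (logit) per point sampled on the object's surface. Under that assumption, the sketch below shows one common way to turn such scores into contact probabilities and then into concrete contact points, either by random sampling or by keeping the most likely points. The function names, the toy four-point "apple," and the scores are all hypothetical; this is not Text2HOI's actual implementation.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function mapping a raw score to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def contact_probabilities(logits):
    """Convert hypothetical per-point scores into per-point contact probabilities."""
    return [sigmoid(z) for z in logits]


def sample_contacts(points, logits, seed=0):
    """Mark each point as a contact independently with its predicted probability (Bernoulli sampling)."""
    rng = random.Random(seed)
    probs = contact_probabilities(logits)
    return [p for p, q in zip(points, probs) if rng.random() < q]


def top_k_contacts(points, logits, k):
    """Deterministic alternative: keep the k points with the highest contact probability."""
    probs = contact_probabilities(logits)
    order = sorted(range(len(points)), key=lambda i: probs[i], reverse=True)
    return [points[i] for i in order[:k]]


# Hypothetical toy "apple": four surface points (metres) with made-up scores.
points = [(0.0, 0.04, 0.0), (0.0, -0.04, 0.0), (0.04, 0.0, 0.0), (-0.04, 0.0, 0.0)]
logits = [2.0, -3.0, 1.5, -1.0]

print(top_k_contacts(points, logits, k=2))  # [(0.0, 0.04, 0.0), (0.04, 0.0, 0.0)]
print(sample_contacts(points, logits))
```

Sampling yields different plausible contact sets for the same command, which fits the "probabilistic" framing above, while the top-k variant gives a single repeatable answer.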

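The technology could be applied across many industries, for example to simulate surgical procedures, to control character motion in games and virtual reality, and to carry out complex scientific experiments virtually. In robotics, precise control of hand motion is also expected to enable more natural interaction between people and robots.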

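Professor Seungryul Baek said, “Text2HOI can be applied in many fields, including virtual and augmented reality (VR/AR), robotics, and medicine,” adding, “We will continue to pursue research that benefits society.”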

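First author Junuk Cha, a researcher on the team, said, “I hope this work becomes a cornerstone for understanding the relationship between text prompts and the generation of hand-object interaction motions, and that much more related research will follow.”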

The results were published online on June 17 at the Conference on Computer Vision and Pattern Recognition (CVPR), one of the world's leading artificial intelligence conferences.

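The research was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP), the National Research Foundation of Korea (NRF), the Ministry of Science and ICT (MSIT), the Korea Institute of Marine Science & Technology Promotion (KIMST), and the CJ Corporation AI Center.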